Yes, you are using AI

Pretending otherwise is a security risk.

A senior leader at a $4B annual revenue bank once told me this about AI governance:

Currently there is no such requirement for {{company_name}} as a policy in-place prohibits AI solutions.

So I went to his company’s website and found this:

Later that same week another security leader at a separate financial services firm told me:

We don’t use AI, so not something we are concerned by

A quick review of this company’s website turned this up:

These conversations are so common for me that I actually needed to develop a framework addressing them. So here are the three failure modes of “we don’t use AI” and what to do about them:

1. The company is officially using AI, but no one told the security team

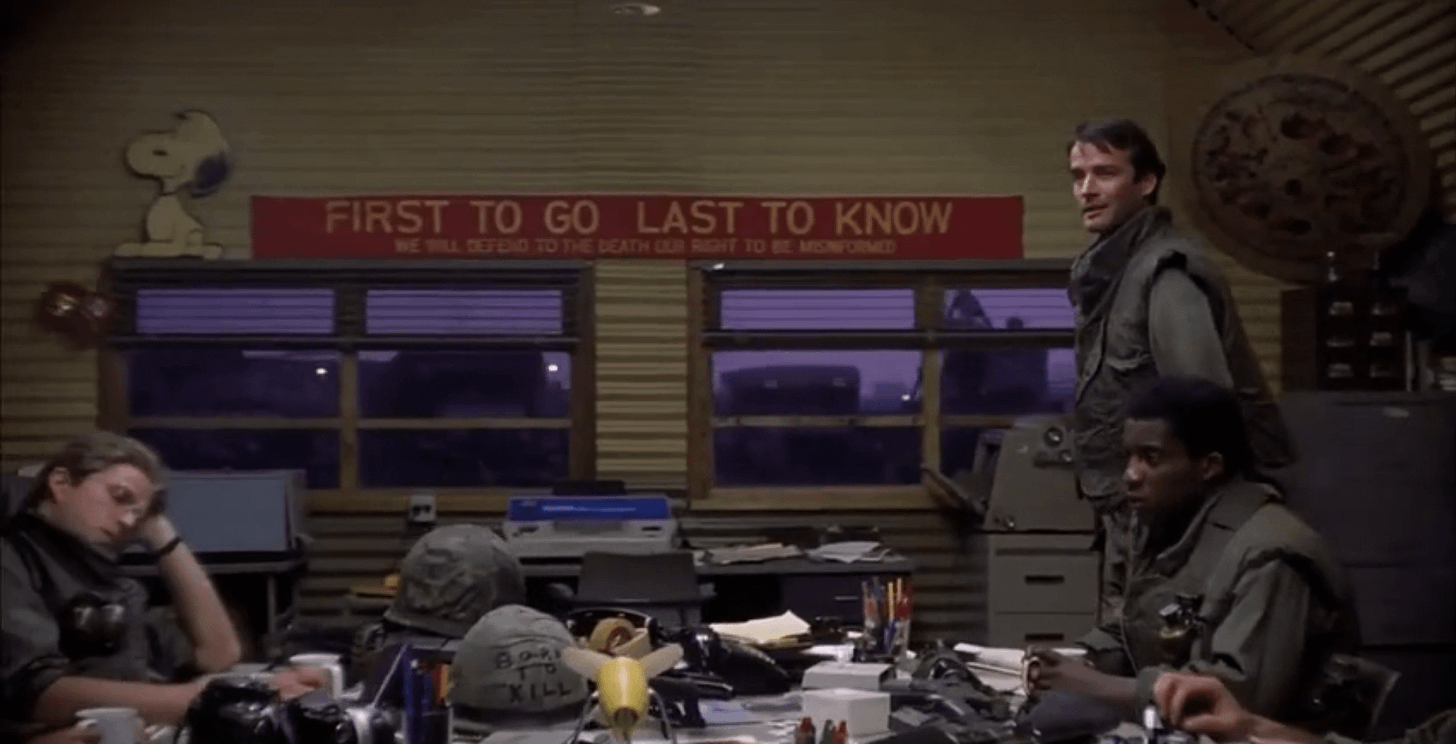

This reminds me of a scene from the Stanley Kubrick film Full Metal Jacket:

Product and marketing teams moving fast and not informing their security peers happens all the time (“last to know”). Simply waiting to be told about new product or technology rollouts means you are in reactive mode (“first to go”).

Here’s what security leaders can do:

Understand your company’s business objectives and roadmap.

Inventory your (AI) systems and create a process for updates.

Consider automated discovery tools.
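As a rough illustration of the inventory-plus-update-process idea above, here is a minimal Python sketch. Everything in it is an assumption for demonstration purposes: the `AISystem` fields, the 90-day review window, and the sample entries are hypothetical, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AISystem:
    """One entry in a hypothetical AI inventory."""
    name: str
    owner: str           # team accountable for the system
    vendor: str          # who provides the underlying model or service
    approved: bool       # has the security team reviewed it?
    last_reviewed: date  # date of the most recent review


def needs_attention(inventory, today, max_age_days=90):
    """Return names of systems that are unapproved or overdue for review."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        s.name for s in inventory
        if not s.approved or s.last_reviewed < cutoff
    ]


# Sample data (entirely made up).
inventory = [
    AISystem("support-chatbot", "Marketing", "ExampleVendor", True, date(2024, 1, 10)),
    AISystem("code-assistant", "Engineering", "ExampleVendor", False, date(2024, 5, 1)),
    AISystem("fraud-model", "Risk", "in-house", True, date(2024, 5, 20)),
]

print(needs_attention(inventory, today=date(2024, 6, 1)))
```

Running a check like this on a schedule is one simple way to turn a static inventory into a process: anything unreviewed or stale surfaces before someone else tells you about it.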

2. There is a formal policy in place “banning AI” but shadow use continues

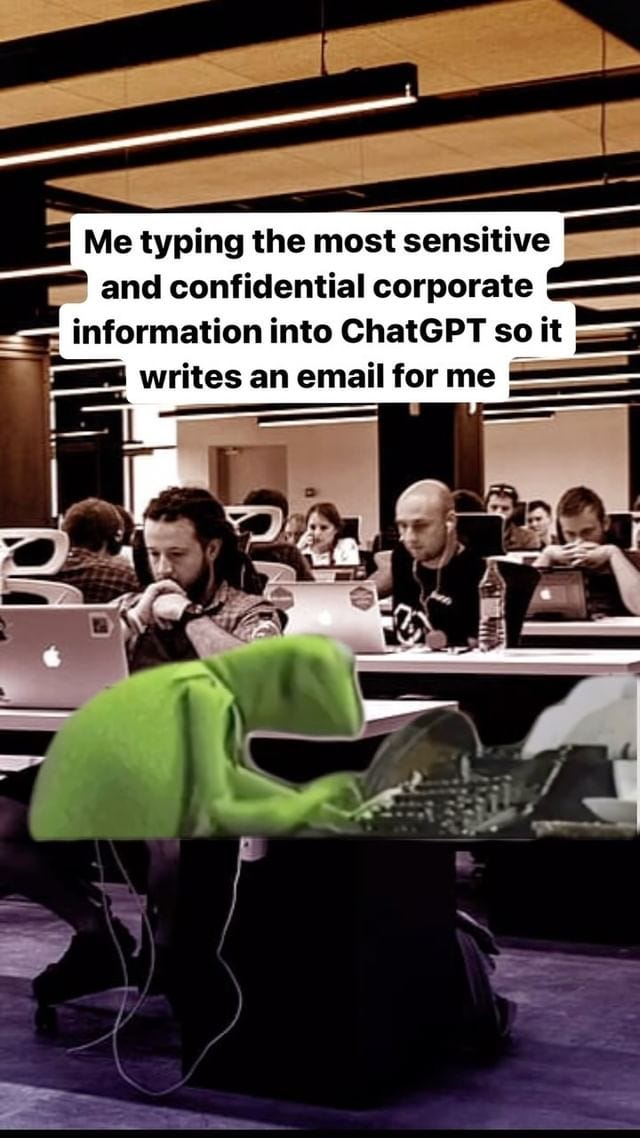

I can sum this up by sharing an Instagram video which got over 36,000 likes:

And to back it up with data: according to one survey, 8% of employees at companies banning ChatGPT admitted to using it anyway!

If almost a tenth of your employees boldly admit to violating your policy, it’s probably time to revisit it. Here are my recommendations for avoiding this failure mode:

Train employees on best practices around how AI tools retain data and train on it.

Create a clear procedure for onboarding new tools.

Don’t just try to block every new AI app.

3. Third (and greater) parties are integrating AI into their applications, which are already approved

Many companies are racing to roll out new AI-powered features, often built on top of existing generative AI service providers like OpenAI and Anthropic. This can create 4th party processing and retention risk, which is difficult to track and manage. I wrote an entire article on this issue, but my recommendations are:

Use a structured approach to documenting your supply chain like StackAware.

Continuously monitor your 3rd+ parties (see StackAware’s API).

Consider limiting subprocessing in your contracts.
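To make the 4th-party problem concrete, here is a small Python sketch of documenting a supply chain as a graph and walking it to find AI providers at any depth. The vendor names and the `SUPPLY_CHAIN` structure are hypothetical, and this is not how StackAware or its API works; it only illustrates the idea of structured tracking.

```python
# Hypothetical supply chain: each party maps to the subprocessors it relies on.
SUPPLY_CHAIN = {
    "YourCompany": ["CRM-Vendor", "HelpDesk-Vendor"],
    "CRM-Vendor": ["OpenAI"],
    "HelpDesk-Vendor": ["Summarizer-Vendor"],
    "Summarizer-Vendor": ["Anthropic"],
}

AI_PROVIDERS = {"OpenAI", "Anthropic"}


def ai_exposure(root, chain, ai_providers):
    """Return {ai_provider: path from root}, found by depth-first search."""
    found = {}
    seen = set()
    stack = [(root, [root])]
    while stack:
        node, path = stack.pop()
        if node in seen:
            continue  # guard against cycles and repeat visits
        seen.add(node)
        if node in ai_providers:
            found[node] = path
        for child in chain.get(node, []):
            stack.append((child, path + [child]))
    return found


for provider, path in sorted(ai_exposure("YourCompany", SUPPLY_CHAIN, AI_PROVIDERS).items()):
    print(provider, "via", " -> ".join(path))
```

In this made-up example, neither approved vendor is an AI company, yet both routes end at a generative AI provider, one of them two hops removed.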

Need to get a handle on your AI use?

Burying your head in the sand isn’t going to help. While I’m sure there are some companies out there with 100% compliance for their “no AI” policy, they are rare.

The best approach is to analyze and manage risks proactively. That’s exactly what StackAware does for you. Leveraging established approaches like the National Institute of Standards and Technology (NIST) AI Risk Management Framework (RMF) and ISO 42001, we assess your risk and build a governance program for you.

Ready to learn more?