Use ChatGPT Team without having your data retained forever

OpenAI confirms something I've suspected for a while.

ChatGPT Team was released last week and one of my clients (wisely) asked about its security. I signed up myself and here's what I found.

Model training

This is off by default (and cannot be turned on by anyone, including admins). From a security perspective this is an improvement over the consumer version, where training is on by default (but you can opt out).

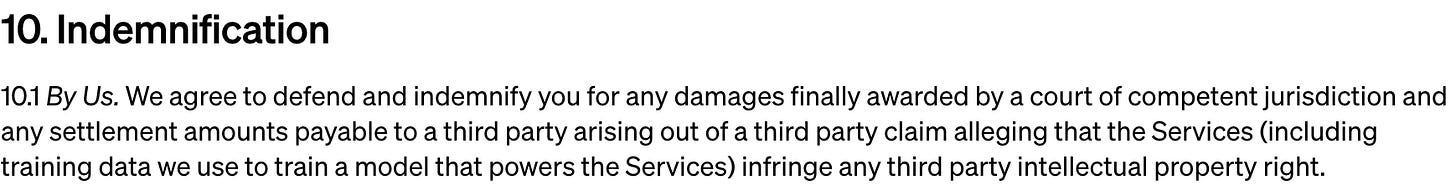

Indemnification

Reminder: I am not an attorney and this is not legal advice.

ChatGPT Team also appears to bring customers under OpenAI’s business terms of service and thus the “Copyright Shield,” whereby OpenAI will defend you against third-party claims that you are infringing on that third party’s intellectual property.

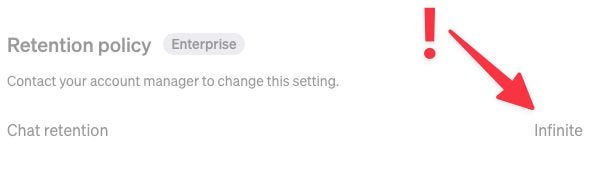

Enterprise controls

These are all grayed out, even for admins, so you need to upgrade to Enterprise to set up Single Sign-on (SSO) and modify retention periods, which brings me to the final topic:

Data retention

This kind of blew my mind, because OpenAI appears to be saying the quiet part out loud here.

Their Privacy Policy has the typical legalese regarding data retention:

We’ll retain your Personal Information for only as long as we need in order to provide our Service to you, or for other legitimate business purposes such as resolving disputes, safety and security reasons, or complying with our legal obligations. How long we retain Personal Information will depend on a number of factors, such as the amount, nature, and sensitivity of the information, the potential risk of harm from unauthorized use or disclosure, our purpose for processing the information, and any legal requirements.

This is understandably vague, and I have long advised treating it as basically meaning “forever,” even though that’s not exactly what it says.

But if you look at the chat retention option in ChatGPT Team, it is actually set to “Infinite” and cannot be changed!

Thus it seems that OpenAI marketing and product management are saying something different than legal via the Privacy Policy. And chats are “Personal Information” according to that document:

When you use our Services, we collect Personal Information that is included in the input, file uploads, or feedback that you provide to our Services (“Content”).

This isn’t the first time we’ve seen some disconnect between different parts of the organization when it comes to security. I understand that things can be disjointed when you are moving fast but this is a little concerning.

Potential workarounds

Get ChatGPT Enterprise

Whether this counts as a workaround is debatable, but if you sign up for Enterprise, administrators have control over data retention.

I’ll note that several of my clients have had difficulty even getting OpenAI on the phone to discuss buying Enterprise. Since it isn’t available in a self-service manner, this may hold you back. Additionally, I have heard from multiple sources that OpenAI is quoting potential buyers at $60/month/user with a minimum of 150 users and a 12-month commitment. $108,000/year for a single software product is out of reach for many businesses.
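The $108,000 figure follows directly from the reported quote. A minimal sketch of the arithmetic, assuming the numbers I heard secondhand (these are not official OpenAI pricing, and the function name is just for illustration):

```python
# Figures as reported to me by multiple sources; not official OpenAI pricing.
PRICE_PER_USER_PER_MONTH = 60  # USD
MINIMUM_USERS = 150
COMMITMENT_MONTHS = 12


def minimum_commitment(price_per_user_month: int, users: int, months: int) -> int:
    """Total spend over the commitment period, in USD."""
    return price_per_user_month * users * months


total = minimum_commitment(PRICE_PER_USER_PER_MONTH, MINIMUM_USERS, COMMITMENT_MONTHS)
print(f"${total:,} over {COMMITMENT_MONTHS} months")  # $108,000 over 12 months
```

Swap in your own headcount to see how far below the reported minimum you are; a 20-person team would still be paying for 150 seats under these terms.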

Use temporary chats (formerly: disable chat history)

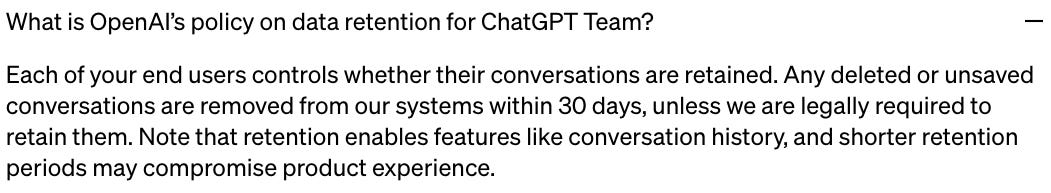

If you are worried about data retention when using ChatGPT Team, you and your team can turn off chat history to take advantage of the 30-day (vs. infinite) retention period.

You cannot enforce this globally throughout the organization; doing so requires ChatGPT Enterprise. Unfortunately, relying on individual employees to manually implement security measures is a recipe for failure.

And this will disable certain features like custom GPTs.

(Update: May 2, 2024) OpenAI has killed off the disable chat history feature, but replaced it with Temporary Chats. Conversations held in a Temporary Chat are only retained for 30 days, but each user has to turn the feature on manually and it cannot be enforced throughout the organization.

Manually delete all (sensitive) conversations

If you still want to use custom GPTs, then you may be able to just manually delete conversations when you are done with them. OpenAI’s Enterprise Privacy section states:

Like turning off conversation history, this requires manual employee action, so I wouldn’t count on it to be airtight.

Use the Playground

If you need to use custom GPTs, you might consider building them as Assistants instead (or in addition). Please see this article for a description of the difference and why the latter is generally more secure. Anyone who is part of your organization can view all available Assistants and interact with them using the Playground.

(Update: 1 February 2024) Although this wasn't clear to me from their documentation, OpenAI confirmed to me after I asked that the Playground follows the OpenAI API terms of service and enterprise privacy conditions. The latter promises a 30-day maximum retention period (and potentially 0 if you are approved for zero data retention [ZDR]), barring a legal hold.

(Update: 1 February 2024) I asked OpenAI on January 14, 2024, and received a very quick response that was entirely boilerplate and did not address my question. Others appear to still be wondering the same thing.

Another downside here is that anyone at your organization with access to the API can see your conversations (see the post I mentioned above for an explanation of how Threads and Assistants work). After doing some sleuthing and checking this forum discussion, it looks like the risk here would be minimal, though, because one needs to know the Thread ID in order to request its contents.

But if you have strict internal compartmentation requirements, this won’t work.
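To make the Thread ID point concrete, here is a rough sketch of what a request for a Thread's messages looks like. It assumes the Assistants API's thread-messages endpoint and its beta header; that API is in beta, so the header version shown may not match what your account expects. The Thread ID and API key are placeholders, and the request is built but deliberately not sent.

```python
import urllib.request

API_BASE = "https://api.openai.com/v1"


def build_thread_messages_request(thread_id: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a request listing the messages in one Thread."""
    if not thread_id:
        raise ValueError("thread_id is required")
    return urllib.request.Request(
        url=f"{API_BASE}/threads/{thread_id}/messages",
        headers={
            "Authorization": f"Bearer {api_key}",
            # Beta header; the version string is an assumption and may change.
            "OpenAI-Beta": "assistants=v2",
        },
        method="GET",
    )


# Placeholder values for illustration only.
req = build_thread_messages_request("thread_abc123", "sk-placeholder")
print(req.get_method(), req.full_url)
```

The Thread ID is part of the URL path, so a colleague with an API key but no ID has nothing to put there. That is obscurity rather than access control, which is why it falls short of strict compartmentation.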

Additionally, you are not able to save your chat history like you can with the user interface. But this forced deletion (as opposed to the default preservation of the approaches described above) will be more secure.

Finally, this will change the pricing model for your usage. It will shift from “all you can eat” (at least for GPT-3.5, because ChatGPT Team limits GPT-4 to 100 messages per 3 hours) to a per-token model.
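If you want a feel for what per-token billing means for your budget, a back-of-the-envelope estimate is enough to start. The sketch below uses made-up token volumes and made-up per-1,000-token prices purely for illustration; check OpenAI's current pricing page for the real rates for your model.

```python
def monthly_api_cost(
    input_tokens: int,
    output_tokens: int,
    usd_per_1k_input: float,
    usd_per_1k_output: float,
) -> float:
    """Estimate monthly spend when input and output tokens are billed separately."""
    return (
        input_tokens / 1000 * usd_per_1k_input
        + output_tokens / 1000 * usd_per_1k_output
    )


# Hypothetical volumes and prices, NOT OpenAI's actual rates.
cost = monthly_api_cost(
    input_tokens=2_000_000,
    output_tokens=500_000,
    usd_per_1k_input=0.01,
    usd_per_1k_output=0.03,
)
print(f"${cost:.2f}")  # $35.00
```

Unlike a flat per-seat fee, this number scales with how much your team actually types and reads, so heavy users can cost far more than light ones.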

Balancing risk and reward with AI

If this all seems complex…it is.

Decisions about whether to tolerate infinite data retention in order to leverage certain features can be difficult, and they will eventually come down to your risk appetite.

But if you need some help in making sense of the AI landscape when it comes to:

Cybersecurity

Compliance

Privacy

I can help.
