Opt out of ChatGPT training

At least for now, better safe than sorry.

TL;DR

Go to this opt-out form.

Enter your email address.

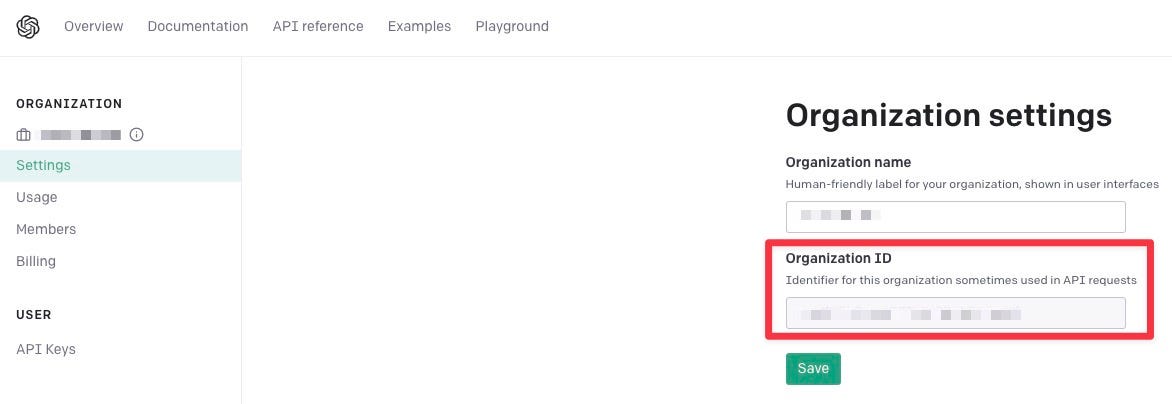

Find your OpenAI Organization ID

Go to this page and log in.

Click the “Settings” tab and find your Organization ID and name as per below:

Copy the Organization ID and name.

Paste them into the form described in step 1.

Hit “Submit.”

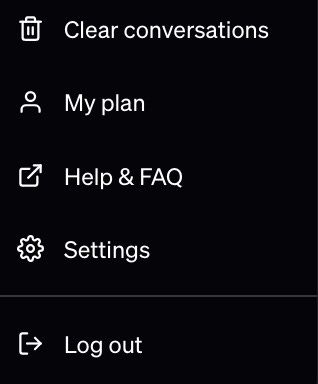

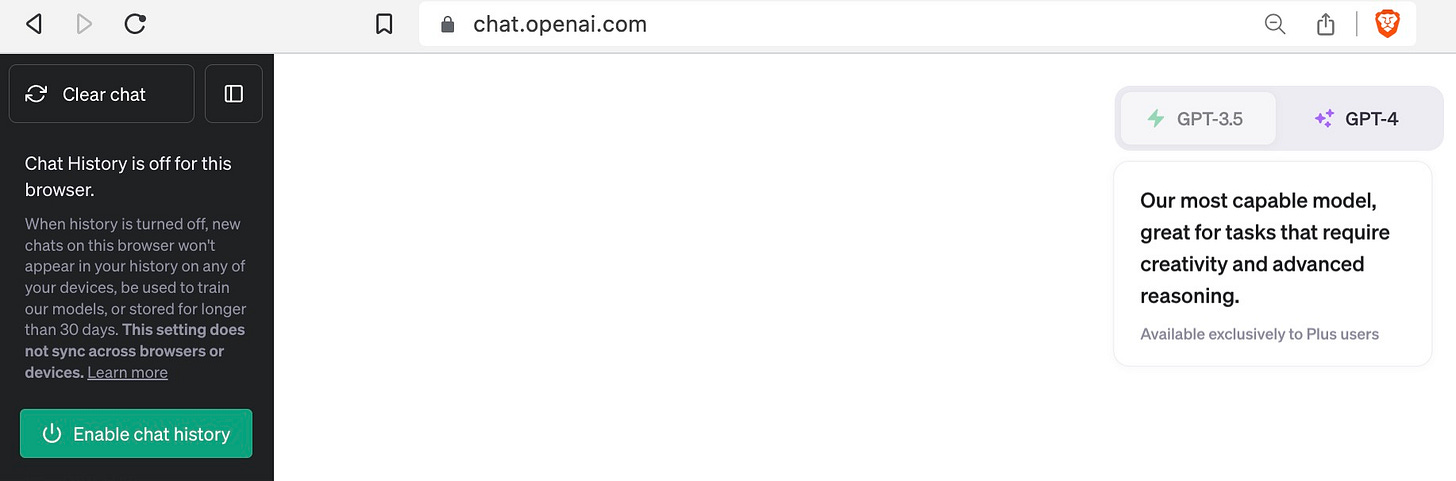

(This and below added 28 June 2023) Navigate to the ChatGPT app.

Click on the ellipses (…) on the lower left of the screen by your name.

Click on “Settings.”

Click on “Data Controls.”

Move the slider for “Chat history & training” to the off position.

Why you should do this

I recommend reviewing OpenAI’s documentation.

From their ChatGPT FAQ:

Will you use my conversations for training?

Yes. Your conversations may be reviewed by our AI trainers to improve our systems.

From their privacy policy:

We’ll retain your Personal Information for only as long as we need in order to provide our Service to you, or for other legitimate business purposes such as resolving disputes, safety and security reasons, or complying with our legal obligations. How long we retain Personal Information will depend on a number of factors, such as the amount, nature, and sensitivity of the information, the potential risk of harm from unauthorized use or disclosure, our purpose for processing the information, and any legal requirements.

From these statements, it seems reasonable to assume OpenAI will indefinitely retain every single piece of information you provide to ChatGPT and use it to train its artificial intelligence (AI) models (“as long as we need in order to provide our Service”).

While most Americans don’t especially care about this type of thing (evidenced by the complete ubiquity of “free” services that harvest and analyze user data for profit), if you are reading Deploying Securely, you probably aren’t like most people.

There are many reasons you might not want your data to be used for training purposes, such as:

Privacy

You don’t want the information underlying your prompts appearing in response to someone else’s questions.

OpenAI has a decent blog post describing the privacy implications of ChatGPT and its training process. And if you are in a position to invoke certain laws [like the European Union (EU) General Data Protection Regulation (GDPR)], you can make a Personal Data Removal Request.

Cybersecurity

A crafty attacker could probe ChatGPT to see if employees from a potential target had provided it with things like passwords, API keys, or other secrets. The craftiest of them might even get ChatGPT to regurgitate this or other sensitive data.

Intellectual property protection

You don’t want to accidentally solve a major problem for a competitor. Amazon, for example, apparently suspects ChatGPT has already ingested some of its confidential information from Amazon employee interactions, and that the AI is reproducing Amazon information in response to certain prompts.

Opting out is not a silver bullet

Even if you do opt out, keep in mind this additional point from the FAQ:

Who can view my conversations?

As part of our commitment to safe and responsible AI, we review conversations to improve our systems and to ensure the content complies with our policies and safety requirements.

So even if OpenAI isn’t training its models on data you provide to it, the company still does retain this information and OpenAI employees and contractors may view it. And if OpenAI gets hacked or otherwise leaks your data, it can end up in the wrong hands. This creates yet another set of privacy, cybersecurity, and intellectual property risks.

As a result, you’ll want to have a solid security policy in place governing how your employees interact with generative AI tools like ChatGPT.
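One way to back such a policy with tooling is to scrub obvious secrets from prompts before they leave your environment. The following is a minimal sketch, not a complete data loss prevention solution. The function name `redact_secrets` and the patterns in `SECRET_PATTERNS` are hypothetical illustrations, and the `sk-` key format is an assumption; you would need to tune the patterns to the secrets your organization actually handles.

```python
import re

# Hypothetical patterns for illustration only. They will miss many secrets
# and may flag harmless text, so treat them as a starting point.
SECRET_PATTERNS = [
    # Strings shaped like "sk-..." API keys (assumed format).
    (re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"), "[REDACTED_API_KEY]"),
    # Strings shaped like AWS access key IDs.
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED_AWS_KEY]"),
    # "password: value" or "password=value" pairs; keeps the label, masks the value.
    (re.compile(r"(?i)\b(password|passwd|pwd)(\s*[:=]\s*)\S+"), r"\1\2[REDACTED]"),
]


def redact_secrets(prompt: str) -> str:
    """Return the prompt with anything matching SECRET_PATTERNS masked."""
    for pattern, replacement in SECRET_PATTERNS:
        prompt = pattern.sub(replacement, prompt)
    return prompt
```

Calling `redact_secrets("Why does login fail with password=hunter2?")` masks the value while leaving the rest of the question intact. Pattern matching like this only catches secrets with a recognizable shape, so it complements employee training rather than replacing it.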

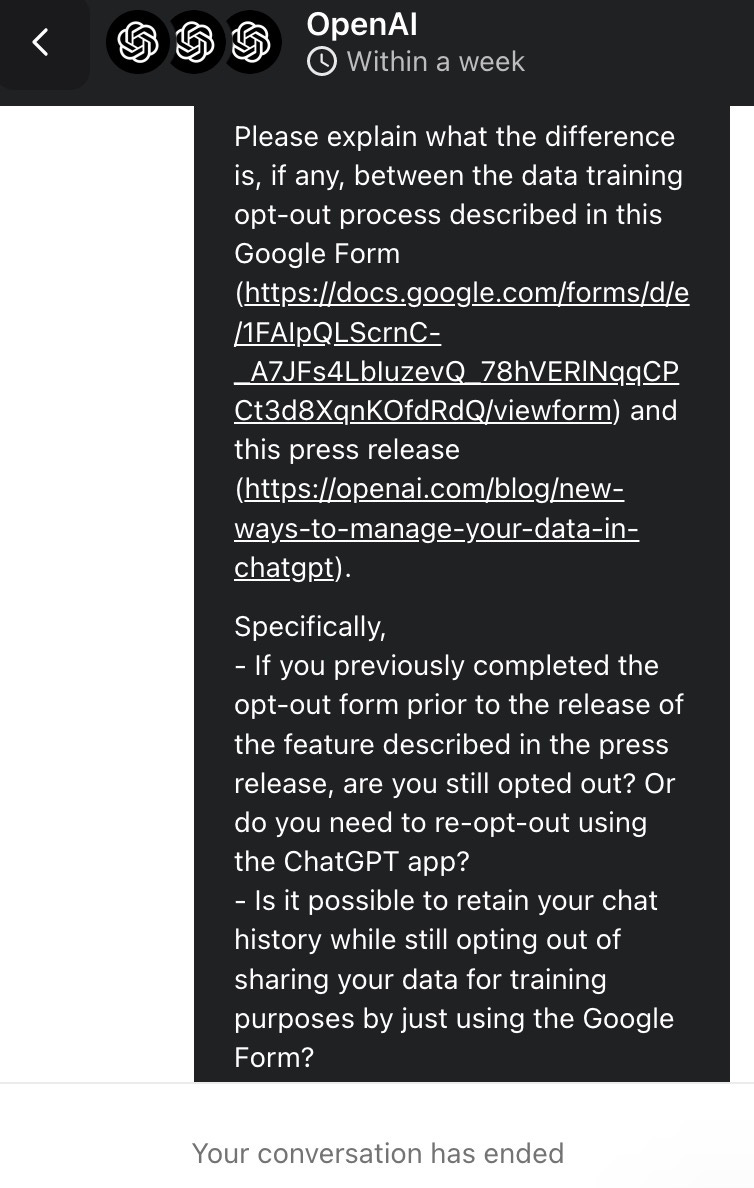

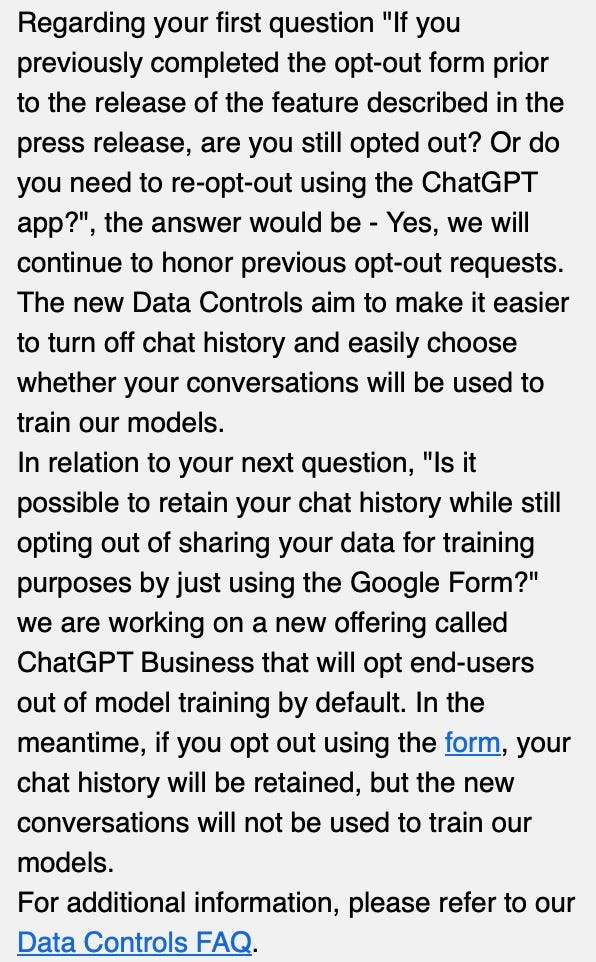

(Update 28 June 2023) In an earlier feature release, ChatGPT introduced the ability to opt out of training on your inputs using the app directly. I sent an inquiry to OpenAI on this topic:

Response received 23 July 2023:

Additionally, and somewhat ominously, it looks like the GPT-4 browsing and plugin features are disabled when you turn off chat history, representing a “dark pattern.”

The API is the most private way to interact with ChatGPT

As of March 1, 2023, OpenAI changed its policy such that:

OpenAI will not use data submitted by customers via our API to train or improve our models, unless you explicitly decide to share your data with us for this purpose. You can opt-in to share data.

Any data sent through the API will be retained for abuse and misuse monitoring purposes for a maximum of 30 days, after which it will be deleted (unless otherwise required by law).

Being a primarily commercial service, the ChatGPT API appears designed to reflect the less lopsided power dynamic between OpenAI and its business customers (compared to the individual consumers who primarily use the chat interface).

So if you want the most secure and private experience when using the tool, use the API.
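For readers who have not used the API before, here is a rough sketch of what a request looks like using only the Python standard library. The helper names `build_chat_request` and `send_chat_request` are my own, the model name `gpt-3.5-turbo` is an assumption you should swap for whichever model your account can access, and the data-handling terms quoted above are OpenAI's policy as of this writing, not something the code enforces.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(
    prompt: str, api_key: str, model: str = "gpt-3.5-turbo"
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,  # assumed model name; change as needed
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

To try it, read your key from an environment variable rather than hard-coding it, then call `send_chat_request(build_chat_request("your prompt", key))`. Splitting building from sending makes it easy to inspect or log exactly what is about to leave your network.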

Why you might not want to be a free rider…

With all of this said, everyone who uses ChatGPT is leveraging data generated by others, so there is definitely a free-rider problem here. If everyone opted out of data sharing, the model would improve much more slowly. And if every content owner on the internet added a paywall, blocked ChatGPT from crawling their site, or took other measures to restrict it from ingesting their data, then there would be nothing for it to train on.

As an AI optimist and advocate, I think there is a balance to be had here. But since we are in the early days of generative AI, things are kind of the Wild West right now. And as a content creator myself, I am concerned that ChatGPT and similar tools are free-riding off of me (more to follow on this specific topic)!

Thus, I think it’s perfectly reasonable and ethical to opt out of data sharing to safeguard against the aforementioned harms. As OpenAI and other organizations develop a more effective governance structure and put more safeguards in place, I’m open to revisiting my position here. And if you are only using ChatGPT for relatively basic queries that contain information already publicly available, you might consider not opting out.

Worried about ChatGPT and other AI tools training on your data?

StackAware helps organizations protect their intellectual property and other sensitive information while still leveraging AI to improve productivity.

Need to figure out how to balance the two?

Update (13 Nov 2023) - see this post for how to opt out of sharing and training when custom-building a GPT.

This article was originally inspired by Kai Uhlig’s LinkedIn post.