Manage 4th party AI processing and retention risk

Map artificial intelligence tools throughout your supply chain.


Having a consistent and logical framework for managing information risk from 3rd (and greater) parties is harder than it seems, as I have written about in the past.

And AI makes the problem even more challenging.

That’s why having a policy that extends throughout your digital supply chain is vital. Otherwise, you are just playing whack-a-mole.

For example, suppose you ban processing confidential information with third-party generative AI tools. This is a fairly restrictive approach, but if that’s what your risk appetite dictates, do what you need to.

Asana Intelligence - Example 1

Unfortunately, your already-approved tools (which are not themselves dedicated generative AI platforms) may be integrating generative AI capabilities. These features can introduce fourth-party AI risk, as in the case of Asana, whose AI features can at least be disabled.

You can implement all the:

disciplinary measures

IP blocking

firewalls

you want, but if you don't address the fact that your already-approved tools are passing confidential information to:

OpenAI

Anthropic

and others

it’s pretty much a waste of time and effort.


In any case, you need to understand how data is being processed and retained throughout your entire supply chain. If a 4th party retains your confidential information indefinitely, that greatly increases your risk surface, because the cumulative probability of a breach keeps rising the longer the data is stored.
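To make the "infinite timeline" point concrete, here is a minimal Python sketch. It assumes, purely for illustration, that a given 4th party has a constant and independent chance p of being breached each year (real-world breach likelihood is neither). Under that assumption, the probability of at least one breach over n years of retention is 1 - (1 - p)^n, which climbs toward 1 as n grows. The function name and the 5% annual figure are hypothetical.

```python
def cumulative_breach_probability(annual_probability: float, years: float) -> float:
    """Probability of at least one breach over `years` of retention.

    Assumes a constant, independent annual breach probability, which is a
    simplifying (hypothetical) model, not a real-world estimate.
    """
    if not 0.0 <= annual_probability <= 1.0:
        raise ValueError("annual_probability must be between 0 and 1")
    if years < 0:
        raise ValueError("years must be non-negative")
    # Chance of surviving every year without a breach is (1 - p) ** years.
    return 1.0 - (1.0 - annual_probability) ** years


# Hypothetical 5% annual breach probability for a 4th party holding your data.
for years in (28 / 365, 1, 5, 20):
    risk = cumulative_breach_probability(0.05, years)
    print(f"{years:>6.2f} years retained -> {risk:.1%} chance of a breach")
```

Under this toy model, shortening retention from years to days shrinks the exposure window roughly in proportion, which is why a zero or short retention period matters so much.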

And at least Asana is clear about exactly what they are doing. For an example of something more vague, look at Databricks Assistant, an AI copilot.

Databricks Assistant - Example 2

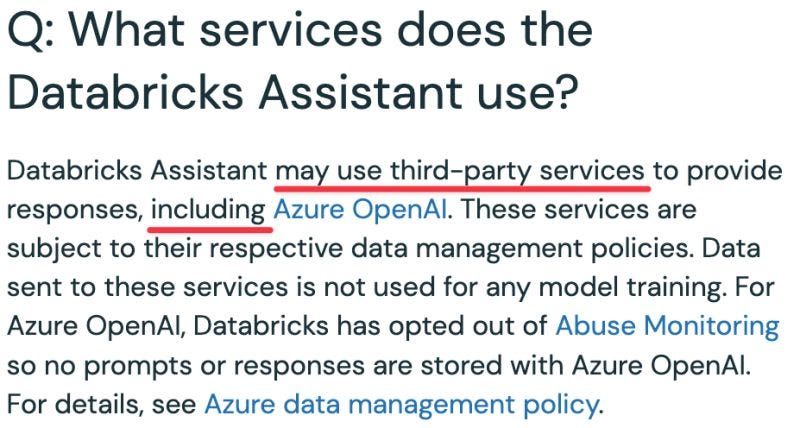

(Update: 8 January 2024) While the company has a good FAQ about how things work, there is a gaping hole obscured by some clever wording.

They talk a whole lot about Azure OpenAI and all the security measures in place, but that is only ONE of the options used for processing! The others are unnamed.

While it’s reassuring that they aren’t training on user inputs, it would help to know which other tools Databricks is leveraging and what their retention policies are. Anthropic, for example, has a default 28-day retention period for enterprise users, while Azure OpenAI (in this case) doesn’t retain any prompts or outputs.

The fact that they don’t identify the other third-party services and focus so heavily on Azure OpenAI is somewhat concerning, because it could suggest they are trying to bury something customers might not be happy about.

Based on public information, there is no way to be sure. But this is why transparency is the best policy.

(Update: 8 January 2024) A member of the Databricks security team provided the following response:

Today Databricks Assistant only uses Azure OpenAI. Given the continual evolution of new models, the language you highlighted was included to indicate that Databricks Assistant (now and in the future) may use models provided by Azure OpenAI, another third-party service, or models provided by Databricks. If a new third-party service model was used that wasn't provided by a supplier already included as a subprocessor then the Databricks subprocessors would be updated.
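Because Databricks says its subprocessor list would be updated if a new third-party model provider were added, one practical control is to snapshot each vendor's published subprocessor list on a schedule and diff the snapshots. The sketch below assumes you already keep those snapshots as sets of names (how you collect them is out of scope). The function, the snapshots, and the provider names are hypothetical, not Databricks' actual list.

```python
from typing import NamedTuple


class SubprocessorChange(NamedTuple):
    added: list[str]    # providers that appeared since the last snapshot
    removed: list[str]  # providers that disappeared since the last snapshot


def diff_subprocessors(previous: set[str], current: set[str]) -> SubprocessorChange:
    """Compare two snapshots of one vendor's published subprocessor list."""
    return SubprocessorChange(
        added=sorted(current - previous),
        removed=sorted(previous - current),
    )


# Hypothetical snapshots of a single vendor's subprocessor list, a month apart.
january = {"Microsoft (Azure OpenAI)", "Amazon Web Services"}
february = {"Microsoft (Azure OpenAI)", "Amazon Web Services", "Anthropic"}

change = diff_subprocessors(january, february)
if change.added:
    # Each new AI provider is a new 4th party whose retention terms need review.
    print("Review retention terms for:", ", ".join(change.added))
```

A set difference is enough here because the question is simply "who is new?"; reviewing the new provider's retention and training terms is still a manual step.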

Atlassian Intelligence - Example 3

From a pure data retention and security perspective, Atlassian Intelligence is probably the most favorable example, based on its Trust Center documentation. The feature uses OpenAI to generate responses to questions about organizational data.

Based on the Trust Center, it looks like Atlassian has negotiated a zero data retention (ZDR) agreement with OpenAI. Thus, assuming this statement is accurate, your 4th-party retention risk is negligible. The only way an attacker could exploit this exposure would be to somehow intercept the data while OpenAI is processing it. While possible, this seems highly unlikely.

Unfortunately, the Atlassian Intelligence terms and conditions are much vaguer and refer back to the Trust Center.

Because these terms would likely take precedence in any dispute, I would focus on them. It seems like a simple update to the Trust Center could radically alter how your data is used and retained. I understand Atlassian’s goal here, which is likely to avoid conflicting or duplicative descriptions of how they handle data (as I found with GitHub Copilot). But this is potentially concerning.

And these terms slap an additional (and, in my opinion, gratuitous) requirement on you not to pass off anything from the tool as human-generated. I’m not sure why Atlassian would care about this at all; it seems like entirely a customer issue.

Zoom AI Companion - Example 4

This is the most complex example of 4th-party AI processing risk that I have encountered. According to Zoom’s AI Companion data handling article:

Zoom is:

developing its own Large Language Model (LLM),

operating its own instance of Llama 2 (probably in its own Infrastructure-as-a-Service (IaaS) environment), and

using Software-as-a-Service (SaaS) models from

OpenAI

Anthropic

This is a hugely complex web of 4th parties. And their retention policies are a little bit vague, so it’s not entirely clear who is keeping what data and for how long.

This setup is not necessarily wrong, and they do make clear that these models aren't training on your data. But having so many different repositories for your information, with unclear retention periods, can potentially increase your cyber and compliance risk.
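One way to keep a web like this manageable is to record, for every approved 3rd-party tool, each 4th-party AI provider it may send your data to and that provider's stated retention period, then flag anything undisclosed or too long. The sketch below is a hypothetical inventory format, not a standard: the field names, the 30-day threshold, and the INDEFINITE sentinel are assumptions, and the sample entries simply restate this article's reading of each vendor's documentation, which you should verify yourself.

```python
from dataclasses import dataclass
from typing import Optional

INDEFINITE = -1  # hypothetical sentinel: provider states no retention limit


@dataclass(frozen=True)
class FourthParty:
    vendor: str                    # your approved 3rd-party tool
    provider: str                  # AI provider that tool may send data to
    retention_days: Optional[int]  # None = not disclosed; INDEFINITE = no limit
    trains_on_data: bool = False


def flag_risks(inventory: list[FourthParty], max_days: int = 30) -> list[str]:
    """Return human-readable findings for entries that exceed your risk appetite."""
    findings = []
    for entry in inventory:
        label = f"{entry.vendor} -> {entry.provider}"
        if entry.trains_on_data:
            findings.append(f"{label}: trains on your data")
        if entry.retention_days is None:
            findings.append(f"{label}: retention not disclosed")
        elif entry.retention_days == INDEFINITE:
            findings.append(f"{label}: retained indefinitely")
        elif entry.retention_days > max_days:
            findings.append(f"{label}: retained {entry.retention_days} days (limit {max_days})")
    return findings


# Sample entries restating this article's reading; verify against current vendor docs.
inventory = [
    FourthParty("Atlassian Intelligence", "OpenAI", retention_days=0),  # ZDR per Trust Center
    FourthParty("Databricks Assistant", "Azure OpenAI", retention_days=0),
    FourthParty("Zoom AI Companion", "OpenAI", retention_days=None),
    FourthParty("Zoom AI Companion", "Anthropic", retention_days=None),
]

for finding in flag_risks(inventory):
    print(finding)
```

With these sample entries, only the two Zoom rows are flagged, because their retention periods are not disclosed. Zoom's own LLM and its Llama 2 instance are left out here because Zoom itself operates them.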

Don’t confuse this with unintended training

The above examples represent a separate issue from unintended training, whereby you accidentally train an AI model on data you wish you hadn’t. I’m just talking about processing and retaining sensitive data here, which basically every organization already lets third parties do.[1]

Obviously you should be concerned about whether fourth parties are training on your data as well, but that doesn’t appear to be happening in any of these examples.

AI governance is not optional

This is just one example of why you need a comprehensive approach to AI governance that lets you manage:

Cybersecurity

Compliance

Privacy

risk without slowing down the business.

With the right framework in place, you can leverage AI for huge productivity gains without undue risk.

So let us do a risk assessment for you.


[1] Training does represent a form of processing, but it is more worrisome from a security perspective because the underlying data (or derivations of it) could be exposed unintentionally to other parties using the same AI model.