Revealing the government's approach to vulnerability management

A deep dive into the multitude of federal systems for prioritizing known software security flaws.

Editorial note: After extensively researching the federal government’s vulnerability management practices, I have assembled my research here. My goal is to provide a formal roadmap for reform useful to policymakers and practitioners alike.

Executive Summary

Like most organizations, the federal government must contend with a vast array of known software vulnerabilities in its systems and networks. Major breaches of government and private sector organizations have shown that correctly prioritizing these issues for remediation is a vital cybersecurity task.

Unfortunately, there exists a wide array of statutes, directives, and other guidance that drives federal efforts toward this end. In addition to the existing bifurcation between the bulk of federal agencies and the Department of Defense (DoD)/Intelligence Community (IC), neither grouping of organizations has an internally-consistent approach.

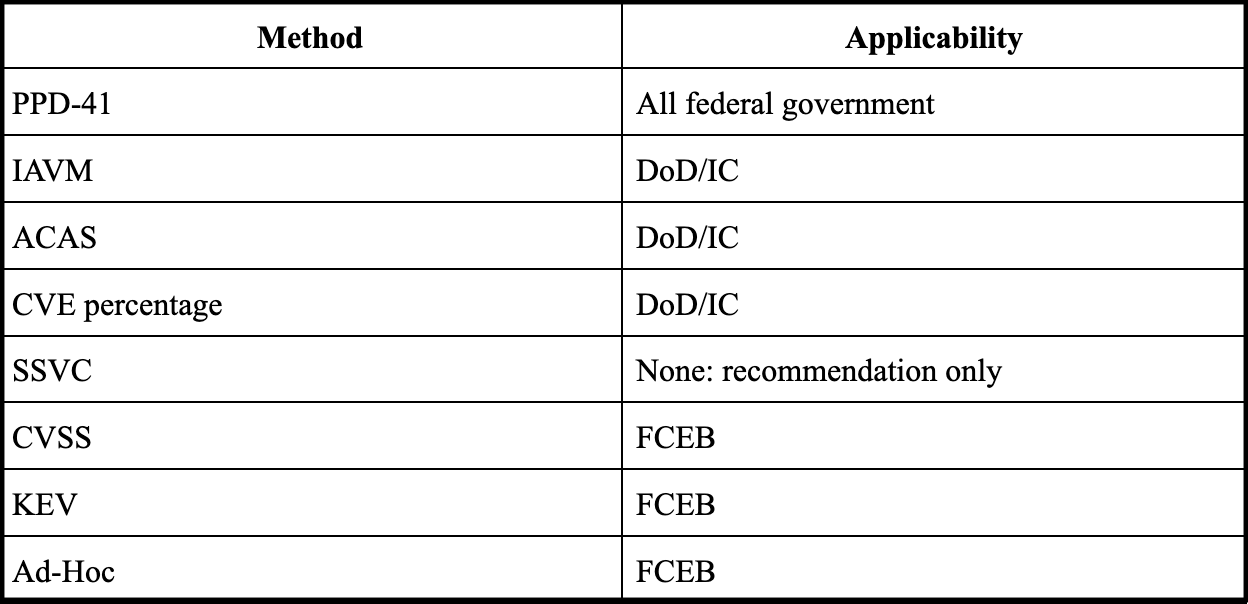

Furthermore, the federal government relies heavily on qualitative vulnerability descriptors, which make it difficult to describe risk in quantitative terms. I identified nine (updated 26 May 2023 - see footnote) such systems in use by the federal government, all of which use varying and non-interoperable scales and rubrics. This lack of cohesion makes comparison between issues challenging and analysis of tradeoffs nearly impossible.

To address these issues, I recommend:

The development of a government-wide quantitative standard for measuring the cybersecurity risk posed by known software vulnerabilities.

Enhanced use of machine-learning and other automation techniques to more effectively predict the probability of malicious exploitation of all known vulnerabilities in federal networks.

Thorough, continuing, and automated asset inventories and business impact analyses by all government agencies to better understand the damage that would result from such exploitation.

A formal study by the Cybersecurity and Infrastructure Security Agency regarding the relative risks posed by both known and unknown software vulnerabilities. If appropriate, the government should reallocate resources accordingly to address the category creating the greatest risk.

Introduction

A recent scan of the .mil domain using a publicly-available network search engine revealed 395 Common Vulnerabilities and Exposures (CVE) on web sites with internet protocol addresses locating them inside the United States.1 One site, chosen at random, had multiple CVEs - detectable from the internet - rated “High” or “Critical” according to the Common Vulnerability Scoring System (CVSS).2

Even within a logically and geographically limited set of parameters, this would seem to be an extremely large number of severe vulnerabilities. A casual observer might expect that government networks are more or less continuously penetrated by all manner of malicious actors as a result.

Unfortunately, appearances can be very deceiving.

The fact is that modern vulnerability scanning tools and management practices generate huge quantities of findings but make it extremely difficult to identify truly important issues that create risk for organizations. Both public and private sector entities find themselves dealing with a never-ending stream of seemingly high priority issues as a result.

And despite massive efforts to stem the tide, massively damaging data breaches resulting from known vulnerabilities - from the Office of Personnel Management (OPM) to Equifax - continue to plague both government and industry.3

The huge volume of findings along with the difficulty in evaluating them means that the biggest underlying problem in vulnerability management is one of prioritization. Through a combination of technical and organizational solutions, the federal government could greatly improve its ability to sort through the array of findings with which it must contend on a daily basis.

A fractured federal landscape

Because it maintains a vast quantity of sensitive and valuable data, the federal government is a frequent target for attackers. Unfortunately, it still struggles to manage the risk created by known software vulnerabilities.4 Even worse, the government has no clear philosophy or program for contending with this risk. One cybersecurity professional with over a decade of experience managing vulnerabilities for the Department of Defense and Federal Civilian Executive Branch (FCEB) agencies summarized existing guidance on the topic as “disjointed.”5

While the Cybersecurity and Infrastructure Security Agency (CISA) has the authority to mandate the cybersecurity posture of non-defense and -intelligence agencies, often referred to as FCEB organizations, its publicly available directives provide a wide range of conflicting guidance.6

The National Institute of Standards and Technology (NIST), part of the Department of Commerce, also promulgates a range of binding and non-binding guidance related to vulnerability management. These standards are generally high-level and provide little guidance for implementation.

The recently established Office of the National Cyber Director (ONCD), ostensibly meant to coordinate the government’s various cybersecurity programs, has not dived deeply into the specific topic of vulnerability management, having kept its pronouncements at the strategic level as of this writing.7

The Office of Management and Budget (OMB) has grappled with the topic on various occasions, but has also kept its statements vague.8

Outside of the agencies operating under CISA mandates, the defense and intelligence communities have pursued their own course. While relatively little is available publicly regarding their practices and procedures in the realm of vulnerability management, what is available suggests a similar lack of consistency.

The disastrous consequences of ignoring exploitable known vulnerabilities make this a problem the government must address.

The state of vulnerability management

A recent automated analysis of the networks of 1,623,118 organizations found that 53% had at least one internet-exposed vulnerability.9 Anecdotally, available information suggests that some private sector enterprises can have 40,000, even 130,000 known vulnerabilities in their systems.10 The NIST’s National Vulnerability Database (NVD) receives dozens of reports of new CVEs every day, with well over half of these rated “high” or “critical” severity according to the Common Vulnerability Scoring System (CVSS).11

Although the onslaught of cyber attacks - especially the recent burst of ransomware - is substantial, it does not seem reasonable to presume that most or even many of the hundreds of thousands of organizations with exposed vulnerabilities have been or will imminently be breached.

The reason for this is that vulnerability scanning tools see the world very differently from hackers.12 The former generally evaluate networks and components for all hypothetical weaknesses and flaws present, irrespective of the real-world likelihood that an attacker might use them. The latter, of course, are focused on identifying - and, in the case of the non-ethical sort, stealing, corrupting, and destroying - valuable data.

Additionally, the CVSS - which CISA directs most federal departments and agencies to use - has many inherent flaws, making it a poor choice for prioritization efforts. Most pronounced is the nature of the formula through which scores are generated.13 The 10-point scale of the system suggests that, among other things, a vulnerability can be ten times as severe as another one. Unfortunately, experience has shown this to be far from the case, as there are thousands of vulnerabilities that are never exploited “in the wild” while a few dozen or hundred issues have led to the majority of all cyber attacks attributable to a known software vulnerability. Partially as a result, the organization that developed the standard, the Forum of Incident Response and Security Teams (FIRST), itself advises not using CVSS alone for measuring risk.14
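To make the scale concrete, here is a minimal Python sketch that maps a CVSS v3.1 base score to its qualitative rating, using the bands from the FIRST specification (None 0.0, Low 0.1-3.9, Medium 4.0-6.9, High 7.0-8.9, Critical 9.0-10.0). The function name `cvss_severity` is my own, chosen for illustration; it is not part of any official tool.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.1 base score (0.0-10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Print the label for a few sample scores.
for score in (3.9, 5.0, 7.5, 9.8):
    print(score, cvss_severity(score))
```

The labels are ordinal: they rank issues against each other but say nothing about how much more likely or damaging exploitation of one would be than another.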

A secondary problem with CVSS is that scanning tools generally report scores without context due to technical limitations.15 Because many simply determine if a component with a known vulnerability is present or whether the first step of an attack could occur, they create a vast quantity of “chaff.” While percentages vary, it would appear that only a very small number of all known vulnerabilities actually can be exploited for a given software application or network, with 10% being a conservative estimate.16

Recent CISA guidance will require FCEB organizations to conduct continuous, automated vulnerability enumeration of their entire network.17 While identifying known vulnerabilities in federal networks is absolutely vital to managing them, prioritizing them will become even more important as departments and agencies identify a flood of new findings.

Defining risk

Exacerbating the problem, there is substantial difference of opinion and practice in both the public and private sectors as to the meaning of the word “risk.” Practitioners commonly refer to individual vulnerabilities as being high or low risk in general terms.18 Unfortunately, in addition to using qualitative terminology, which is easy to misinterpret and is highly subjective, it is impossible for such general categorizations to take into account either the likelihood of exploitation or severity of impact should such an event occur.19 Without understanding the specifics for each attribute, it is difficult to describe the risk a given vulnerability poses.

Outcomes following successful exploitation of a given vulnerability range from trivial to lethal, and considering this fact when measuring risk is essential. While the OPM hack demonstrated the ability of real-world attackers to cause significant damage, it is quite conceivable that they could cause physical harm with the right vulnerability. For example, from 2012 to 2017, DoD weapons testers routinely found vulnerabilities in weapon systems under development that, by using simple techniques, allowed them to take control of these systems and often operate undetected.20 Even more recently, testers identified unspecified but presumably exploitable cybersecurity vulnerabilities in the F-35 aircraft’s onboard systems.21

Hostile exploitation of a vulnerability in one of these situations would have far more catastrophic consequences than exploitation of the same one in, for example, dining facility software, and risk assessments must take this into account.22 The below equation represents the most basic way to do so, and is what I will use to define the risk posed by software vulnerabilities:

Risk = Likelihood of Exploitation x Severity of Impact

Quantifying these two factors - likelihood and severity - is thus the most important task for the federal government when evaluating the risk of a given software vulnerability. Few of the publicly-identified federal systems used for doing so operate this way, and none of them do so in a quantitative manner.
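As a minimal sketch of the equation above, the following Python snippet computes risk as an expected loss in dollars. All figures are hypothetical and invented purely for illustration; the function name `risk_usd` and the two example systems are my own assumptions rather than part of any federal standard.

```python
def risk_usd(likelihood: float, impact_usd: float) -> float:
    """Risk = likelihood of exploitation x severity of impact (expected loss in dollars)."""
    if not 0.0 <= likelihood <= 1.0:
        raise ValueError("likelihood must be a probability between 0 and 1")
    if impact_usd < 0:
        raise ValueError("impact must be non-negative")
    return likelihood * impact_usd


# Hypothetical inputs: the same vulnerability, with the same assumed
# likelihood of exploitation, present in two very different systems.
LIKELIHOOD = 0.05
assumed_impact_usd = {
    "weapon system": 500_000_000,
    "dining facility software": 50_000,
}

for name, impact in assumed_impact_usd.items():
    print(f"{name}: ${risk_usd(LIKELIHOOD, impact):,.0f}")
```

With identical likelihoods, the difference in risk is driven entirely by the severity term, which is why a single label attached to the vulnerability itself cannot capture it.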

Remediation challenges

Describing risk in absolute terms - dollars, for example - versus relative ones - such as “high” or “low” - is an essential part of any program designed to manage it. As one OMB memorandum recognizes, cyber risk represents only one type of risk facing federal agencies.23 Although vast, the federal government’s resources are finite, and applying them in the most cost-effective manner requires knowing not only the expense associated with mitigating risk, but also the cost of the risk itself.

While some might object to boiling down cyber risk calculations - which can potentially involve determining the number of human lives lost to an attack - to dollars, this is already common practice in almost every other aspect of public and private sector governance. The Department of Transportation, the Centers for Disease Control, and the Environmental Protection Agency all make such calculations when analyzing the risk/reward tradeoff of policy decisions.24 Furthermore, there are already well-developed techniques for calculating cyber risk in dollar terms, such as the Factor Analysis of Information Risk (FAIR) method.25

Even assuming that the government treated cyber risk in a vacuum and did not contemplate tradeoffs with other types of risk, it would face substantial constraints. Research has shown that, on average, organizations generally can only fix 5-20% of the vulnerabilities in their network per month.26 It appears that this number represents more-or-less a natural “limit” in terms of ability to remediate such issues. This is even further evidence that prioritizing the highest risk items is a key federal task.
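The capacity constraint can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of any agency's process: the findings, their dollar risk values, and the helper `select_for_remediation` are all hypothetical, and the 20% capacity figure is simply the upper end of the range cited above.

```python
def select_for_remediation(findings, capacity_fraction):
    """Return the highest-risk findings that fit within one month's remediation capacity."""
    if not 0.0 <= capacity_fraction <= 1.0:
        raise ValueError("capacity_fraction must be between 0 and 1")
    # Number of findings the organization can fix this month, rounded down.
    budget = int(len(findings) * capacity_fraction)
    ranked = sorted(findings, key=lambda f: f["risk_usd"], reverse=True)
    return ranked[:budget]


# Ten hypothetical findings with made-up quantitative risk values in dollars.
risks = [1_200, 950_000, 40, 310_000, 8_800, 15, 72_000, 560, 2_300, 125_000]
findings = [{"id": f"FINDING-{i}", "risk_usd": r} for i, r in enumerate(risks, start=1)]

for finding in select_for_remediation(findings, capacity_fraction=0.2):
    print(finding["id"], finding["risk_usd"])
```

The ranking step is only possible because each finding carries a number; with labels such as “high” and “critical” alone, ties within a category leave the choice of what to fix first arbitrary.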

Unfortunately, it does not appear the government has a consolidated program or philosophy for addressing the risk posed by the vast quantity of known vulnerabilities in its networks. In fact, various agencies use - or have directed others to use - a variety of different approaches. Some organizations, namely CISA, even simultaneously recommend or mandate methods of prioritization that are explicitly critical of each other.27

A more consolidated approach toward this problem is thus necessary to ensure the most efficient and effective allocation of national resources.

Unknown vulnerabilities

Finally, an entirely separate category of vulnerabilities not detectable by most scanning tools poses an equivalent threat. According to industry research, it appears that slightly more than half of all vulnerabilities are exploited prior to becoming known publicly and the vendor making a patch available to resolve them.28 These previously unknown vulnerabilities thus create additional potential risk for federal departments and agencies.

For example, in late 2020, hackers working on behalf of or as part of the Russian foreign intelligence service breached multiple United States government agency networks after establishing a foothold in the networks of federal contractor SolarWinds.29 This incident appears to have primarily involved the exploitation of vulnerabilities unknown to anyone (except the attackers) at the time.30

Although such unknown vulnerabilities are generally outside the scope of this paper, understanding the relative risk posed by them versus known issues is important for making rational resource allocation decisions.

The government approach

As of April 2021, the federal government spent more than $100 billion on information technology and cyber-related investments.31 At least one version of the Fiscal Year (FY) 2023 National Defense Authorization Act (NDAA) allocated more than $1.5 billion for DoD alone to conduct assessments and evaluations of cybersecurity vulnerabilities.32 Thus it seems fair to estimate total federal government expenditures on the management of software vulnerabilities to be in the billions of dollars per year.

A lack of internally consistent high-level guidance on this topic, however, has resulted in a mishmash of different frameworks, policies, and tools being applied. There is a wide array of interconnecting statutes, directives, and programs that control the remediation of known vulnerabilities. There is also a vast array of government agency organizations that monitor software vulnerability information, but there is little evidence that they coordinate with each other.33

Broadly speaking, it is possible to split federal guidance into three main categories: government-wide mandates and recommendations, CISA directives, and Department of Defense (DoD) and Intelligence Community (IC) standards. I contacted all Cabinet departments, CISA, the OMB, and the ONCD with a series of detailed questions regarding their vulnerability prioritization practices, but received replies from none.34 Thus, the below information is based on the public record and interviews with industry experts.

Government-wide

As one might expect, the higher in the governmental organization chart one goes, the more generic the guidance becomes. With that said, relatively prescriptive guidance regarding software vulnerability management has issued forth from the executive and legislative branches of late. Implementing it in a consistent way throughout the government, however, has proven elusive.

Congress

Some Members of Congress have gone as far as to identify specific vulnerabilities by name, such as the infamous “log4shell” (CVE-2021-44228) flaw that caused chaos in the public and private sectors in the winter of 2021-2022.35 A Senate hearing also followed its disclosure.36 Similarly, the FY2022 National Defense Authorization Act included a fair number of provisions regarding security vulnerabilities. It dedicated several sections toward identifying, disclosing, and mitigating or preventing them.37

While Congress allocates a substantial sum of money to the general problem, it can find itself out of step with the software industry on occasion. A recent effort to introduce specific requirements regarding known vulnerabilities into the Department of Homeland Security (DHS) software procurement in the House version of the FY2023 NDAA, for example, received strong industry opposition.38

Executive Office of the President

On the executive branch side, there have been some notable actions related to vulnerability and cyber risk management. The most detailed to date is Executive Order (EO) 14028, issued in 2021, which includes - among other things - a section requiring the development of a standardized federal government playbook for responding to cybersecurity vulnerabilities (discussed below) as well as one on improving detection of them. Interestingly, the guidance exempts “National Security Systems,” thus excluding most of the DoD and IC from the requirements.39

EO 13800, issued in 2017, recognized that “[k]nown but unmitigated vulnerabilities are among the highest cybersecurity risks faced by executive departments and agencies.” It furthermore directed the use of NIST’s Framework for Improving Critical Infrastructure Cybersecurity for managing cybersecurity risk in certain federal agencies.40 This document - based on the existing NIST Cybersecurity Framework - mirrors the original guidance in saying little beyond that federal organizations should use existing threats, likelihoods of exploitation, and expected impacts to calculate risk.41 The CSF itself was the product of the 2013 EO 13636 that directed NIST to develop it.42

Presidential Policy Directive (PPD)-41, issued in 2016, establishes the concept of a “cyber incident” which “may include a vulnerability in an information system, system security procedures, internal controls, or implementation that could be exploited by a threat source.”43 The associated schema diagram provides a qualitative 0-5 scale for rating the severity of such cyber incidents from “inconsequential” to “emergency.”44

In 2015, EO 13691 encouraged the establishment of cybersecurity Information Sharing and Analysis Organizations (ISAO), which could help to disseminate software vulnerability-related data.45

NIST

At the direction of the White House and of its own accord, NIST has produced a voluminous amount of documentation regarding cybersecurity. Being a standards-focused organization, NIST has proposed a range of practices when it comes to vulnerability management, primarily through a set of special publications (SP). Foremost among these is SP 800-53, “Security and Privacy Controls for Information Systems and Organizations.” The document begins by acknowledging that

Realistic assessments of risk require a thorough understanding of the susceptibility to threats based on the specific vulnerabilities in information systems and organizations and the likelihood and potential adverse impacts of successful exploitations of such vulnerabilities by those threats.46

Unfortunately, aside from this, the document does little to explain exactly how to make such types of assessments. The most detailed guidance it provides suggests “requiring no known vulnerabilities in the delivered system with a Common Vulnerability Scoring System (CVSS) severity of medium or high” without describing how to calculate the risk posed by such issues (and confusingly omitting “critical” issues, the most severe according to the standard).47 NIST SP 800-53 also recommends prioritizing vulnerability remediation by “severity” without specifying what that means.48

SP 800-37, entitled “Risk Management Framework for Information Systems and Organizations,” is another important NIST publication applicable to vulnerability management. Establishing a cyber risk management system that is mandatory for government agencies, it recommends several important concepts, such as identifying an organizational risk tolerance.49

Unfortunately, SP 800-37 does not explain how to calculate such a value (or any other risk rating). Furthermore, it recommends describing the impact level of systems using a complex and confusing set of qualitative terms (such as “low-high” and “moderate-moderate”).50 In addition to preventing any sort of unambiguous comparison between systems, substantial research has shown that such qualitative ratings are ineffective while at the same time giving analysts a false sense of certainty in their results.51

CISA requirements

Given its applicability to the majority of federal government information systems, CISA guidance is necessarily important to review when examining federal government vulnerability management practices. Unfortunately, the agency has published several contradictory statements that suggest a lack of internal clarity regarding how best to prioritize known flaws.

The agency’s “Cyber Hygiene” program leverages CVSS, describing it as a way to “prioritize vulnerability management strategies.”52 Per a Binding Operational Directive (BOD) released in 2019, CVSS “Critical vulnerabilities must be remediated within 15 calendar days of initial detection,” and “High” ones must be remediated within 30.53
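The timelines in the 2019 directive amount to a simple lookup, sketched below in Python. The function name and the choice to return `None` for other ratings are my own assumptions; the sketch encodes only the two windows quoted above and nothing else from the directive.

```python
from datetime import date, timedelta

# Remediation windows, in calendar days from initial detection,
# as quoted above from the 2019 BOD.
REMEDIATION_DAYS = {"Critical": 15, "High": 30}


def remediation_deadline(severity, detected_on):
    """Return the remediation due date for a finding, or None if no window is quoted."""
    days = REMEDIATION_DAYS.get(severity)
    if days is None:
        return None  # the text quoted above sets no deadline for other ratings
    return detected_on + timedelta(days=days)


detected = date(2022, 9, 1)  # hypothetical detection date
for severity in ("Critical", "High", "Medium"):
    print(severity, remediation_deadline(severity, detected))
```

Note that the only input is the CVSS label: neither the likelihood of exploitation nor the value of the affected system changes the deadline.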

In 2021, however, and in response to EO 14028, CISA released a set of “Cybersecurity Incident & Vulnerability Response Playbooks” that made a different recommendation. While generally avoiding specifics about exact prioritization methodologies, the playbooks interestingly suggest using the Stakeholder Specific Vulnerability Categorization (SSVC).54 The SSVC, whose acronym reverses the letter order of CVSS and which is explicitly critical of that standard, uses a series of decision trees to determine the rapidity of remediation appropriate for a given vulnerability based on qualitative inputs.55

In another BOD issued at roughly the same time as the playbooks, CISA revised its guidance from 2019. Most importantly, this BOD required agencies to prioritize the remediation of Known Exploited Vulnerabilities (KEV) above all others. Interestingly, the agency noted that ‘attackers do not rely only on “critical” vulnerabilities to achieve their goals; some of the most widespread and devastating attacks have included multiple vulnerabilities rated “high”, “medium”, or even “low.”’56 This has been quite clear since at least 2014, when the “Heartbleed” vulnerability (CVE-2014-0160) caused at least 840 identified breaches despite originally being ranked as a 5.0/10.0 on the CVSS scale (a “medium” severity issue).57

Despite its apparent refutation of CVSS as an effective scoring tool, CISA noted in the same BOD that “CVSS scoring can still be a part of an organization’s vulnerability management efforts” and warned that the directive “does not release [agencies] from any of their compliance obligations, including the resolution of other vulnerabilities.”58

In addition to the CVSS, SSVC, and the KEV, CISA occasionally issues vulnerability-specific guidance specifying timelines for remediation. In response to the disclosure of log4shell, for example, CISA directed FCEB agencies to address this and related vulnerabilities within two weeks.59

Thus, it appears that CISA recommends or requires four different methodologies for vulnerability prioritization.

DoD/IC requirements

The DoD and intelligence communities operate differently than FCEB agencies. At a high level, Office of the Director of National Intelligence (ODNI) guidance from 2019 suggests that its members incorporate “existing mission assurance factors (e.g., threat impact severity, exploitability, and IC element exposure) into the prioritization of vulnerability mitigation and mission capability delivery.”60 This resembles NIST and other guidance applicable to the FCEB, but the DoD and IC use different methods for implementing it.

Functionally, these organizations follow a program called Information Assurance Vulnerability Management (IAVM).61 The IAVM program evaluates all known vulnerabilities and distributes remediation guidance in the form of Information Assurance Vulnerability Alerts (IAVA) and Information Assurance Vulnerability Bulletins (IAVB).62

Issues identified via IAVAs represent the highest priority vulnerabilities to remediate, with zero outstanding IAVA-flagged vulnerabilities often being a de facto requirement to achieve Authority to Operate (ATO) for intelligence community systems.63 Those highlighted in IAVBs represent the second highest priority vulnerability for remediation, but anecdotal evidence suggests these are sometimes ignored throughout the DoD and IC.64

Additionally, vulnerability management programs rely heavily on the use of commercial tools developed by the company Tenable, called the Assured Compliance Assessment Solution (ACAS).65 Experts with experience in DoD and IC elements suggest that such organizations would address the issue by first remediating IAVAs, then “critical” ACAS findings, then “high” ACAS findings.66
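The ordering the experts describe can be expressed as a tiered sort, sketched below in Python. The field names (`iava`, `acas_severity`), the sample findings, and the catch-all final tier are hypothetical; this illustrates the described sequence and is not an official DoD or IC rubric.

```python
def dod_priority(finding):
    """Return a sort tier: IAVAs first, then 'critical' and 'high' ACAS findings."""
    if finding["iava"]:
        return 0
    if finding["acas_severity"] == "critical":
        return 1
    if finding["acas_severity"] == "high":
        return 2
    return 3  # everything else, an assumed catch-all tier


# Hypothetical findings, invented for illustration.
findings = [
    {"id": "F-1", "iava": False, "acas_severity": "high"},
    {"id": "F-2", "iava": False, "acas_severity": "critical"},
    {"id": "F-3", "iava": True, "acas_severity": "medium"},
    {"id": "F-4", "iava": False, "acas_severity": "low"},
]

# sorted() is stable, so findings within a tier keep their original order.
ordered = sorted(findings, key=dod_priority)
for finding in ordered:
    print(finding["id"], dod_priority(finding))
```

In this example the IAVA-flagged finding moves ahead of a “critical” scanner finding even though its own scanner rating is only “medium,” showing how the two rubrics interact without ever producing a common measure of risk.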

Finally, the aforementioned ODNI guidance identifies increasing “Common Vulnerability Enumeration remediation rate to 95% or above” as an organizational goal.67 This implies a more broad-brush effort to simply reduce the raw number of identified CVEs rather than targeting the ones creating the greatest risk or even the ones with the highest likelihood of exploitation.

Like CISA, the DoD and IC appear to use a range of different metrics and rubrics for vulnerability prioritization, albeit ones that are entirely separate from those of FCEB organizations. Thus, the already bifurcated system for vulnerability management across government is equally complex in both groups of organizations, and the two systems differ substantially from each other.

Recommendations

Analyzing available information regarding federal government vulnerability prioritization methods reveals at least nine (updated 26 May 2023) different systems ostensibly in use.68 While it may certainly be the case that different organizations have different risk appetites, it does not follow that the method for measuring this risk should vary so widely.69

Methods of vulnerability prioritization in federal government use

If the federal government is to make sound decisions regarding the appropriate resource allocations related to cyber risk management, it logically follows that the government should be able to describe this risk in a standardized manner across all organizations. To address this reality:

The President should direct NIST to develop a quantitative standard for measuring the cybersecurity risk posed by known software vulnerabilities in a consistent manner. NIST should also revise SP 800-37 to mandate the use of this standard. National Security Systems and the DoD and IC should not be exempt from the requirement. This order should also mandate that all official reporting and communication from federal agencies use this standard. I offer up the Deploy Securely Risk Assessment Model (DSRAM) as an example of how to make such quantitative assessments.70 As part of this standard, NIST should develop recommendations for methods to analyze both the quantitative likelihood of exploitation and severity of impact that would result from such an event.

The Exploit Prediction Scoring System (EPSS), also published by FIRST, is one such example of the former.71 A freely-available data set, it uses a machine learning model to predict the probability of exploitation of every published CVE. Although not without its critics, it certainly represents a coherent input to cyber risk management efforts.72 CISA should consider funding the EPSS directly and making its underlying data and source code freely available as a condition of doing so.
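Because the EPSS expresses likelihood as a probability, its outputs can be combined arithmetically in a way that qualitative labels cannot. The Python sketch below estimates the chance that at least one of several vulnerabilities is exploited. It assumes, as a simplification, that exploitation events are independent, and the scores shown are made up for illustration rather than taken from the EPSS data set.

```python
import math


def prob_any_exploited(probabilities):
    """Probability that at least one vulnerability is exploited, assuming independence."""
    for p in probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must be between 0 and 1")
    # P(at least one) = 1 - P(none) = 1 - product of (1 - p_i)
    return 1.0 - math.prod(1.0 - p for p in probabilities)


# Hypothetical EPSS-style probabilities for three CVEs on one system.
scores = [0.02, 0.10, 0.50]
print(f"{prob_any_exploited(scores):.3f}")
```

A result of this kind could then serve as the likelihood term in a quantitative risk calculation, which is the sort of input the recommended standard would need.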

CISA should consider sharing the underlying data from its KEV database with that used by the EPSS project. Currently the former is relatively limited in that it omits dates and frequencies of exploitations.73 Furthermore, the number of issues identified - 834 at the time of this writing - is relatively small, considering that research has identified more than 4,000 vulnerabilities exploited between 2009 and 2019.74 Additional information regarding real-world exploitation events could help to refine the accuracy of likelihood calculations, using the EPSS or some other system.

All government organizations should conduct a thorough, continuous, and automated asset inventory - to identify - and a business impact analysis - to value - their entire suite of information systems. Recent OMB guidance requires this to a degree, but understanding the potential impact of an exploited vulnerability is not possible without such a comprehensive view.75 CISA’s recently released BOD 23-01, which requires all FCEB organizations to conduct automated asset discovery, is also a step in the right direction, and the output of these efforts should directly feed analysis efforts.76 Using a revamped SP 800-37, all government organizations should then develop detailed estimates of the potential impacts to their information systems in quantitative terms.

CISA or NIST should conduct a formal study on the frequency of malicious exploitations of federal networks to determine the relative preponderance of known versus unknown vulnerabilities used in attacks. Based on the aforementioned research, it may be the case that the bigger threat for government systems is posed by “zero-day” vulnerabilities, rather than known ones. Armed with this information, as well as a coherent system for valuing the risk posed by known issues, CISA could potentially recommend shifting resources toward identifying unknown issues. Conversely, it might become clear that currently identified flaws are the bigger threat and thus deserve increased resource allocation.

Conclusion

Given the explosive growth in certain types of cyberattacks - especially ransomware - along with the semi-periodic revelations of catastrophic breaches suffered by the federal government, the status quo leaves much to be desired.77 The government likely spends billions of dollars attempting to manage the risk from known vulnerabilities in its information systems, but still suffers attacks that some observers value as inflicting the better part of 100 billion dollars of damage.78

The nation is either not investing in the right things, not investing enough, or some combination of the two. The only way to determine the case for known vulnerabilities in federal information systems is to conduct a risk-based quantitative analysis. With clearer, more actionable information in hand, policy makers and implementers can make more informed decisions when allocating limited national resources.

Acknowledgments

For their feedback and assistance, I would like to thank Stephen Carter, Chris Hughes, Jonathan Todd, Russ Andersson, Tony Turner, and those who assisted in this project while desiring to remain anonymous.

Disclosures

My company, StackAware, is a business partner of RapidFort (Russ Andersson’s employer) and has had early business discussions regarding potential partnerships with Nucleus Security (Stephen Carter’s employer) and Tenable.

Shodan.io, accessed September 7, 2022. CVEs are the most common type of known vulnerability and every one recorded is publicly available in the National Institute of Standards and Technology’s (NIST) National Vulnerability Database (NVD). NIST NVD, “Vulnerabilities,” accessed September 28, 2022, https://nvd.nist.gov/vuln.

Shodan.io, “128.160.11.24,” accessed September 7, 2022, https://www.shodan.io/host/128.160.11.24. NIST NVD, “CVE-2019-0215,” https://nvd.nist.gov/vuln/detail/CVE-2019-0215. NIST NVD, “CVE-2022-22720,” https://nvd.nist.gov/vuln/detail/CVE-2022-22720. The CVSS translates numerical ratings from 0.0-10.0 into qualitative ratings from “None” to “Critical.” Forum of Incident Response and Security Teams (FIRST), “Common Vulnerability Scoring System version 3.1: Specification Document,” accessed September 16, 2022, https://www.first.org/cvss/specification-document.

The credit rating company Equifax suffered a major data breach in 2017 following the malicious exploitation of CVE-2017-5638, which was identified publicly two months beforehand. “Equifax, Apache Struts, and CVE-2017-5638 vulnerability,” Synopsys, September 15, 2017, https://www.synopsys.com/blogs/software-security/equifax-apache-struts-vulnerability-cve-2017-5638/. During the hack of the Office of Personnel Management (OPM) in 2014 and 2015, the attackers deployed PlugX malware, which exploited a previously discovered vulnerability (CVE-2012-0158). Knowledge Now, “PLUGX,” accessed September 20, 2022, https://know.netenrich.com/threatintel/malware/PlugX. Josh Fruhlinger, “The OPM hack explained: Bad security practices meet China's Captain America,” CSO, February 12, 2020, https://www.csoonline.com/article/3318238/the-opm-hack-explained-bad-security-practices-meet-chinas-captain-america.html.

This paper defines a known software vulnerability (abbreviated as simply “vulnerability” unless otherwise specified) as a flaw in the design or implementation of a computer program that would allow an attacker to compromise the intended confidentiality, integrity, or availability of data contained therein.

Google Doc comment from Chris Hughes, September 29, 2022.

Cybersecurity and Infrastructure Security Agency (CISA), “FEDERAL CIVILIAN EXECUTIVE BRANCH AGENCIES LIST,” accessed September 26, 2022, https://www.cisa.gov/agencies.

The White House, “A Strategic Intent Statement For the Office Of the National Cyber Director,” October, 2021, https://www.whitehouse.gov/wp-content/uploads/2021/10/ONCD-Strategic-Intent.pdf. The only instance I could find regarding the ONCD’s position on vulnerability management was when Christopher DeRusha noted in mid-2022 that “[n]o longer will we be forced to relearn the same lessons from every attack, but instead we will systematically store and keep system logs to learn from past vulnerabilities and train the next generation of system defenders.” Testimony of Christopher J. DeRusha, Deputy National Cyber Director for Federal Cybersecurity and Federal Chief Information Security Officer before the United States House of Representatives Committee on Homeland Security Subcommittee on Cybersecurity, Infrastructure Protection, & Innovation, “Securing the DotGov: Examining Efforts to Strengthen Federal Network Cybersecurity,” May 17, 2022, https://docs.house.gov/meetings/HM/HM08/20220517/114763/HHRG-117-HM08-Wstate-DeRushaC-20220517.pdf.

Office of Management and Budget (OMB) Circular No. A-130, “Managing Information as a Strategic Resource,” revised July 28, 2016, https://www.cio.gov/policies-and-priorities/circular-a-130/. OMB Memorandum M-22-18, “Enhancing the Security of the Software Supply Chain through Secure Software Development Practices,” September 14, 2022, https://www.whitehouse.gov/wp-content/uploads/2022/09/M-22-18.pdf.

“The Fast and the Frivolous: Pacing Remediation of Internet-Facing Vulnerabilities,” SecurityScorecard and the Cyentia Institute, June 6, 2022, https://securityscorecard.pathfactory.com/risk-intelligence/cyentia-fast-and-frivolous, p. 4.

Christian Hyatt, LinkedIn post, September 21, 2022, https://www.linkedin.com/posts/christianhyatt_security-vulnerabilities-vulnerabilitymanagement-activity-6977600004400046080-6BV8. Brook S.E. Schoenfield, “Mismatch? CVSS, Vulnerability Management, and Organizational Risk,” IOActive, April 13, 2020, https://ioactive.com/cvss-vulnerability-management-and-organizational-risk/.

NIST NVD, “Dashboard,” accessed September 20, 2022, https://nvd.nist.gov/general/nvd-dashboard.

For the purposes of this paper, “vulnerability scanning tools” refer primarily to dynamic application security testing (DAST) tools. While static analysis security testing (SAST), software composition analysis (SCA) and other types of scanners also detect vulnerabilities, they generally see the most use by software developers rather than consumers, with the federal government being mostly the latter.

Henry Howland, “CVSS: Ubiquitous and Broken,” Drew University, February 15, 2022, https://dl.acm.org/doi/pdf/10.1145/3491263.

FIRST, “Common Vulnerability Scoring System version 3.1: User Guide”, Section 2.1, June 2019, https://www.first.org/cvss/user-guide.

Breachlock, “Can Results from DAST (Dynamic Application Security Testing) be false positive?,” June 22, 2019, https://www.breachlock.com/can-results-from-dast-dynamic-application-security-testing-be-false-positive/. Dan Goldberg, “The False Positives Problem with CVE Detection,” Azul, August 25, 2022, https://www.azul.com/blog/the-false-positives-problem-with-cve-detection/.

Ericka Chickowski, “Only 3% of Open Source Software Bugs Are Actually Attackable, Researchers Say,” Dark Reading, June 24, 2022, https://www.darkreading.com/application-security/open-source-software-bugs--attackability. FIRST Exploit Prediction Scoring System (EPSS), “The EPSS Model,” accessed September 27, 2022, https://www.first.org/epss/model. CISA BOD 22-01, “Reducing the Significant Risk of Known Exploited Vulnerabilities,” November 3, 2021, https://www.cisa.gov/binding-operational-directive-22-01.

CISA Binding Operational Directive (BOD) 23-01, October 3, 2022, https://www.cisa.gov/binding-operational-directive-23-01.

University of California, San Francisco, “High-risk Vulnerability within IBM Maximo Asset Management,” accessed September 23, 2022, https://it.ucsf.edu/high-risk-vulnerability-within-ibm-maximo-asset-management. CISA, “Top 30 Targeted High Risk Vulnerabilities,” released April 29, 2015 and revised September 29, 2016, https://www.cisa.gov/uscert/ncas/alerts/TA15-119A. Jerry Gamblin, “8 Types of High-Risk Cybersecurity Vulnerabilities,” February 25, 2021, Kenna Security, https://www.kennasecurity.com/blog/8-types-of-high-risk-cybersecurity-vulnerabilities/.

Douglas W. Hubbard and Richard Seiersen, How to Measure Anything in Cybersecurity Risk (John Wiley & Sons, Hoboken, NJ: 2016), 13-15. Google Doc comment from Chris Hughes, September 29, 2022.

Government Accountability Office (GAO), “GAO-19-128: Weapon System Cybersecurity: DOD Just Beginning to Grapple with Scale of Vulnerabilities,” https://www.gao.gov/assets/gao-19-128.pdf, p. 21.

Anthony Capaccio, “Lockheed’s F-35s Get a Flawed $14 Billion Software Upgrade,” Bloomberg, Jan. 26, 2022, https://www.bloomberg.com/news/articles/2022-01-26/f-35-fighter-jet-s--14-billion-software-upgrade-is-deployed-despite-flaws.

“Campus Dining System (CDS) set to arrive at Maxwell Air Force Base 1 Aug 21,” 42d Force Support Squadron, July 27, 2021, https://www.maxwell.af.mil/News/Display/Article/2709409/campus-dining-system-cds-set-to-arrive-at-maxwell-air-force-base-1-aug-21/.

OMB Memorandum M-17-25, “Reporting Guidance for Executive Order on Strengthening the Cybersecurity of Federal Networks and Critical Infrastructure”, May 19, 2017, https://www.whitehouse.gov/wp-content/uploads/legacy_drupal_files/omb/memoranda/2017/M-17-25.pdf.

Sarah Gonzalez, “How Government Agencies Determine The Dollar Value Of Human Life,” NPR, April 23, 2020, https://www.npr.org/2020/04/23/843310123/how-government-agencies-determine-the-dollar-value-of-human-life.

Daniel Stone and Tyler Ross, “Cyber Risk Management: Establishing a Blueprint with FAIR,” FAIR Institute, https://www.fairinstitute.org/blog/cyber-risk-management-establishing-a-blueprint-with-fair.

“The Fast and the Frivolous,” p. 19. “The EPSS Model.”

I specifically addressed this apparent contradiction in my inquiry with CISA described above, but received no response so clarification was not forthcoming.

Kathleen Metrick, Jared Semrau, and Shambavi Sadayappan, “Think Fast: Time Between Disclosure, Patch Release and Vulnerability Exploitation — Intelligence for Vulnerability Management, Part Two,” Mandiant, April 13, 2020, https://www.mandiant.com/resources/blog/time-between-disclosure-patch-release-and-vulnerability-exploitation.

The White House, “FACT SHEET: Imposing Costs for Harmful Foreign Activities by the Russian Government,” April 15, 2021, https://www.whitehouse.gov/briefing-room/statements-releases/2021/04/15/fact-sheet-imposing-costs-for-harmful-foreign-activities-by-the-russian-government/.

Unit 42, “SolarStorm Supply Chain Attack Timeline,” Palo Alto Networks, December 23, 2020, https://unit42.paloaltonetworks.com/solarstorm-supply-chain-attack-timeline/. Sivathmican Sivakumaran, “Three Bugs in Orion’s Belt: Chaining Multiple Bugs for Unauthenticated RCE In the Solarwinds Orion Platform,” Zero Day Initiative, January 21, 2021, https://www.zerodayinitiative.com/blog/2021/1/20/three-bugs-in-orions-belt-chaining-multiple-bugs-for-unauthenticated-rce-in-the-solarwinds-orion-platform. Although the initial attack vector does not appear to have used known-at-the-time vulnerabilities, the hackers did use several known ones subsequent to the initial compromise. Jenny Anderson and Inno Eroraha, “SolarWinds Orion Vulnerability (CVE-2020-10148) Explained,” netSecurity, July 13, 2022, https://blog.netsecurity.com/solarwinds-orion-vulnerability-explained/.

GAO-22-106054, “TECHNOLOGY MODERNIZATION FUND: Past Awards Highlight Need for Continued Scrutiny of Agency Proposals,” May 25, 2022, p. 2, https://www.gao.gov/assets/gao-22-106054.pdf.

H.R. 7900 - FY23 National Defense Authorization Bill, Chairman’s Mark, Section 4201, https://docs.house.gov/meetings/AS/AS00/20220622/114818/BILLS-117HR7900ih.pdf.

GAO-20-629, “Cybersecurity: Clarity of Leadership Urgently Needed to Fully Implement the National Strategy,” September 22, 2020, https://www.gao.gov/products/gao-20-629, (p. 19, 21-23, 43-45, 48).

Emails to OMB, ONCD, Department of Commerce, Agriculture, Defense, Education, Energy, Health and Human Services, Homeland Security, Housing and Urban Development, Interior, Labor, State, Transportation, Treasury, and Veterans Affairs press offices on September 12, 2022. Submission of press inquiry form to Department of Justice, September 12, 2022. Email to Office of the Director of National Intelligence (ODNI) press office, September 19, 2022. Email to CISA, September 29, 2022.

Opening Statement of Chairwoman Haley Stevens, Subcommittee on Research and Technology, Joint Subcommittee Investigations and Oversight & Research and Technology, Committee on Science, Space, and Technology, United States House of Representatives, “Securing the Digital Commons: Improving the Health of the Open-Source Software Ecosystem,” May 11, 2022, https://docs.house.gov/meetings/SY/SY21/20220511/114727/HHRG-117-SY21-MState-S001215-20220511.pdf. Ranking Member John Katko, Committee on Homeland Security, United States House of Representatives, “Katko on Russia’s Latest Attempt to Target Our Nation’s Infrastructure,” January 12, 2022, https://republicans-homeland.house.gov/katko-on-russias-latest-attempt-to-target-our-nations-infrastructure/.

Opening Statement by David Nalley, “Responding to and Learning from the Log4Shell Vulnerability,” Committee on Homeland Security and Government Affairs, United States Senate, February 8, 2022, https://www.hsgac.senate.gov/imo/media/doc/Testimony-Nalley-2022-02-08.pdf.

S.1605 - National Defense Authorization Act for Fiscal Year 2022, Sections 1542-1544, https://www.congress.gov/bill/117th-congress/senate-bill/1605/text.

The most objectionable requirement - from the private sector perspective - was that suppliers provide a “certification that each item listed on the submitted bill of materials is free from all known vulnerabilities or defects affecting the security of the end product or service.” Letter from the Alliance for Digital Innovation, BSA | The Software Alliance, the Cybersecurity Coalition, and the Information Technology Industry Council, September 14, 2022, https://alliance4digitalinnovation.org/wp-content/uploads/2022/09/Multi-association-letter-on-SBOM-final-9.14.2022.pdf.

The White House, EO 14028, “Executive Order on Improving the Nation’s Cybersecurity,” May 12, 2021, https://www.whitehouse.gov/briefing-room/presidential-actions/2021/05/12/executive-order-on-improving-the-nations-cybersecurity/, sections 6 and 7.

Executive Order 13800—Strengthening the Cybersecurity of Federal Networks and Critical Infrastructure, May 11, 2017, https://www.govinfo.gov/content/pkg/DCPD-201700327/pdf/DCPD-201700327.pdf.

NIST Cybersecurity Framework, version 1.1, April 16, 2018, https://nvlpubs.nist.gov/nistpubs/CSWP/NIST.CSWP.04162018.pdf, ID.RA-5.

Executive Order 13636 - Improving Critical Infrastructure Cybersecurity, February 12, 2013, https://www.federalregister.gov/documents/2013/02/19/2013-03915/improving-critical-infrastructure-cybersecurity.

Presidential Policy Directive 41 - United States Cyber Incident Coordination, July 26, 2016, https://obamawhitehouse.archives.gov/the-press-office/2016/07/26/presidential-policy-directive-united-states-cyber-incident.

PPD-41 Cyber Incident Severity Schema, https://obamawhitehouse.archives.gov/sites/whitehouse.gov/files/documents/Cyber%2BIncident%2BSeverity%2BSchema.pdf.

Executive Order 13691 - Promoting Private Sector Cybersecurity Information Sharing, February 13, 2015, https://www.federalregister.gov/documents/2015/02/20/2015-03714/promoting-private-sector-cybersecurity-information-sharing.

NIST SP 800-53, rev. 5, “Security and Privacy Controls for Information Systems and Organizations,” September 2020, page 4, https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-53r5.pdf.

NIST SP 800-53, p. 281-282.

NIST SP 800-53, p. 281.

NIST, “SP 800-37 Rev. 2: Risk Management Framework for Information Systems and Organizations: A System Life Cycle Approach for Security and Privacy,” December 2018, https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-37r2.pdf, p. 19-20.

NIST SP 800-37, p. 34.

Hubbard and Seiersen, p. 15-16.

CISA BOD 19-02, April 29, 2019, https://www.cisa.gov/sites/default/files/bod-19-02.pdf.

CISA BOD 19-02.

CISA, “Cybersecurity Incident & Vulnerability Response Playbooks: Operational Procedures for Planning and Conducting Cybersecurity Incident and Vulnerability Response Activities in FCEB Information Systems”, November 2021, https://www.cisa.gov/sites/default/files/publications/Federal_Government_Cybersecurity_Incident_and_Vulnerability_Response_Playbooks_508C.pdf, p. 23.

Jonathan Spring, Allen D. Householder, Eric Hatleback, Art Manion, Madison Oliver, Vijay S. Sarvepalli, Laurie Tyzenhaus, and Charles G. Yarbrough, “Prioritizing Vulnerability Response: A Stakeholder-Specific Vulnerability Categorization (Version 2.0),” Carnegie Mellon University Software Engineering Institute, April 2021, https://resources.sei.cmu.edu/library/asset-view.cfm?assetid=653459.

CISA BOD 22-01, November 3, 2021, https://www.cisa.gov/binding-operational-directive-22-01.

Michael Roytman, “CVSS Score: A Heartbleed By Any Other Name,” AT&T Cybersecurity, May 20, 2014, https://cybersecurity.att.com/blogs/security-essentials/cvss-score-a-heartbleed-by-any-other-name

CISA BOD 22-01.

Cyber Safety Review Board, “Review of the December 2021 Log4j Event,” July 11, 2022, p. 6, https://www.cisa.gov/sites/default/files/publications/CSRB-Report-on-Log4-July-11-2022_508.pdf.

ODNI, “Improving Cybersecurity for the Intelligence Community Information Environment Implementation Plan,” August 2019, https://www.dni.gov/files/documents/CIO/Improving_Cybersecurity-IC_IE_ImpPlan-August_2019_reduced_web.pdf, p. 47.

Army LandWarNet NetOps Architecture, "Information Assurance Vulnerability Management", accessed September 21, 2022, https://netcom.army.mil/vendor/lnaDocs/INFORMATION%20ASSURANCE%20VULNERABILITY%20MANAGEMENT%20(IAVM).doc. Department of Defense Inspector General, “DoD Compliance with the Information Assurance Vulnerability Alert Policy,” December 1, 2000, https://media.defense.gov/2000/Dec/01/2001713971/-1/-1/1/01-013.pdf. LinkedIn conversation with Stephen Carter, Chief Executive Officer of Nucleus Security, September 20, 2022.

Carter, September 20, 2022. NIST Computer Security Resource Center, “information assurance vulnerability alert (IAVA)”, accessed September 22, 2022, https://csrc.nist.gov/glossary/term/information_assurance_vulnerability_alert. NIST Computer Security Resource Center, “information assurance vulnerability bulletin (IAVB)”, accessed September 22, 2022, https://csrc.nist.gov/glossary/term/information_assurance_vulnerability_bulletin.

Carter, September 20, 2022.

Carter, September 20, 2022.

Tenable, “Assured Compliance Assessment Solution (ACAS), Powered by Tenable,” accessed September 20, 2022, https://www.tenable.com/sites/default/files/uploads/documents/whitepapers/Whitepaper-Assured_Compliance_Assessment_Solution_ACAS_Powered_by_Tenable.pdf. Email from cybersecurity expert with experience in federal government vulnerability management practices, September 19, 2022.

Tenable, ACAS. Carter, September 20, 2022. Anecdotal reports from a practitioner in the field (who works for a competitor of Tenable) suggest that fewer than 1% of known vulnerabilities have an available quantitative priority score using the commercial equivalent of the tool. LinkedIn comment from Tony Turner, Vice President, Fortress Information Security, August 31, 2022, https://www.linkedin.com/feed/update/urn:li:activity:6970362119871483904?commentUrn=urn%3Ali%3Acomment%3A%28activity%3A6970362119871483904%2C6970489178656567297%29.

ODNI, “Improving Cybersecurity,” p. 17.

The DoD Platform One’s Overall Risk Assessment (ORA) methodology could conceivably be considered a ninth, as it proposes a method for managing the risk from vulnerabilities in software container images. Since it is built on top of the CVSS, however, I chose not to include it as a separate methodology. LinkedIn post by Russ Andersson, October 3, 2022, https://www.linkedin.com/posts/activity-6982712644130504704-dFm6. DoD Platform One, “Overall Risk Assessment (Formerly Risk Radiator),” May 4, 2022, https://repo1.dso.mil/dsop/dccscr/-/blob/master/ABC/ORA%20Documentation/Iron_Bank_-_Overall_Risk_Assessment__Formerly_Risk_Radiator_.pdf. In May of 2023, I discovered that CISA was also recommending (through its Cyber Hygiene program) the use of a “Risk Rating System” (RRS), which built on CVSS by assigning additional arbitrary values to each score. See this guide for details.

See this article for my definition of risk appetite: Walter Haydock, “Managing your Risk Surface,” Deploying Securely, June 24, 2022, https://www.blog.deploy-securely.com/p/managing-your-risk-surface.

Haydock, “The Deploy Securely risk assessment model - version 0.3,” March 21, 2022, https://www.blog.deploy-securely.com/p/the-deploying-securely-risk-assessment.

FIRST, “The EPSS Model.”

Jonathan Spring, “Probably Don’t Rely on EPSS Yet,” June 6, 2022, Carnegie Mellon University Software Engineering Institute, https://insights.sei.cmu.edu/blog/probably-dont-rely-on-epss-yet/.

CISA Known Exploited Vulnerabilities Catalog, accessed September 28, 2022, https://www.cisa.gov/known-exploited-vulnerabilities-catalog.

Catalin Cimpanu, “Only 5.5% of all vulnerabilities are ever exploited in the wild,” ZDNet, June 4, 2019, https://www.zdnet.com/article/only-5-5-of-all-vulnerabilities-are-ever-exploited-in-the-wild/

OMB Memorandum M-22-18.

CISA, BOD 23-01, October 3, 2022, https://www.cisa.gov/binding-operational-directive-23-01.

Security Magazine, “Ransomware attacks nearly doubled in 2021,” February 28, 2022, https://www.securitymagazine.com/articles/97166-ransomware-attacks-nearly-doubled-in-2021.

I acknowledge that this estimate includes private sector recovery costs. But since the primary target of the SolarWinds incident appears to have been federal networks, it seems fair to allocate the majority of the recovery costs to the government. Gopal Ratnam, “Cleaning up SolarWinds hack may cost as much as $100 billion,” Roll Call, January 11, 2021, https://rollcall.com/2021/01/11/cleaning-up-solarwinds-hack-may-cost-as-much-as-100-billion/.
