Get a Microsoft 365 Copilot security teardown without hours of documentation review

Deploying enterprise AI securely.

TL;DR

Microsoft 365 Copilot offers more granular data residency and retention controls than competing offerings like ChatGPT Team. This might make it the right choice for enterprises already using the Microsoft 365 ecosystem.

Copilot can only access organizational data to which its user has at least view access. While it doesn’t override any existing permission boundaries, its ability to query vast amounts of data might make it easier for malicious insiders to find valuable data they can access (whether by design or otherwise). According to one study by Varonis, 10% of all Microsoft 365 folders were exposed to the entire organization.

This granularity can also be a double-edged sword. For example, Copilot’s retention policy is not standalone; it interacts with retention settings you have already established, which may override your Copilot-specific settings. Additionally, the labelling of Copilot-generated material is not perfect and may lead to accidental violations of your data classification policy.

Using Copilot to browse the web introduces a further wrinkle in terms of data retention. Because the Bing API is covered by separate terms and conditions, snippets of confidential information may be retained indefinitely.

Enterprises fear unmanaged AI use, and Copilot is clearly targeting this

Last fall, Microsoft launched an AI-powered Copilot for its Microsoft 365 suite of tools (including Word, Excel, PowerPoint, etc.), which I’ll refer to as “Copilot” for the rest of this post unless otherwise noted. Because it integrates easily with existing internal data sets, Microsoft took the relatively obvious step of making retrieval-augmented generation (RAG) with large language models (LLMs) accessible to average business users.

With the launch of Microsoft Copilot Studio shortly thereafter, you can also build custom GPTs in Microsoft 365 like you would with OpenAI’s products. At $30/user/month (with an annual commitment), it’s priced similarly to ChatGPT Team. To buy it, though, you’ll also need Microsoft 365 Business Standard or Premium, an additional prerequisite.

In this post, I’ll look at the highlights of Microsoft 365 Copilot from a security and privacy perspective.

Copilot data retention policies are granular…and complex

Copilot stores:

Prompts

Responses

“information used to ground Copilot’s response”

To me, this last item essentially means the data used in Copilot’s RAG process.

According to the retention documentation:

Data from Copilot messages is stored in a hidden folder in the mailbox of the user who runs Copilot. This hidden folder isn’t designed to be directly accessible to users or administrators, but instead, store data that compliance administrators can search with eDiscovery tools.

This is important to know: just because something isn’t visible to an individual user doesn’t mean it has been deleted forever. Microsoft also provides considerable detail about the mechanics of retention and deletion:

After a retention policy is configured for Microsoft Copilot for Microsoft 365 interactions, a timer job from the Exchange service periodically evaluates items in the hidden mailbox folder where these messages are stored. The timer job typically takes 1-7 days to run. When these items have expired their retention period, they're moved to the SubstrateHolds folder—another hidden folder that’s in every user mailbox to store "soft-deleted" items before they're permanently deleted.

Other existing policies can override this deletion process, though:

permanent deletion from the SubstrateHolds folder is always suspended if the mailbox is affected by another Copilot or Teams retention policy for the same location, Litigation Hold, delay hold, or if an eDiscovery hold is applied to the mailbox for legal or investigative reasons.

Microsoft lays out how this would work in an infographic:

Finally, admins can view and manage stored data, and they can manually request hard deletion of specific user interactions.
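To make the ordering of these steps concrete, here is a minimal Python sketch of the lifecycle as the quoted documentation describes it. It is a toy model, not Microsoft’s implementation: every class, field, and function name is hypothetical, each call to `run_timer_job` stands in for one run of the Exchange timer job (its 1-7 day cadence is not modeled), and it assumes an item in SubstrateHolds is purged on the next run unless one of the listed holds applies.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum


class Location(Enum):
    HIDDEN_COPILOT_FOLDER = "hidden Copilot folder"
    SUBSTRATE_HOLDS = "SubstrateHolds (soft-deleted)"
    PERMANENTLY_DELETED = "permanently deleted"


@dataclass
class Mailbox:
    # Conditions that, per Microsoft's documentation, suspend permanent
    # deletion from SubstrateHolds. The field names are hypothetical.
    other_copilot_or_teams_policy: bool = False
    litigation_hold: bool = False
    delay_hold: bool = False
    ediscovery_hold: bool = False

    def deletion_suspended(self) -> bool:
        return any((
            self.other_copilot_or_teams_policy,
            self.litigation_hold,
            self.delay_hold,
            self.ediscovery_hold,
        ))


@dataclass
class CopilotItem:
    created: date
    location: Location = Location.HIDDEN_COPILOT_FOLDER


def run_timer_job(item: CopilotItem, mailbox: Mailbox,
                  retention_days: int, today: date) -> Location:
    """Simulate one (hypothetical) timer-job pass over a single item."""
    expired = today - item.created >= timedelta(days=retention_days)
    if item.location is Location.HIDDEN_COPILOT_FOLDER and expired:
        # Expired items are first soft-deleted into SubstrateHolds.
        item.location = Location.SUBSTRATE_HOLDS
    elif (item.location is Location.SUBSTRATE_HOLDS
          and not mailbox.deletion_suspended()):
        # Assumption: purge happens on the next pass unless a hold applies.
        item.location = Location.PERMANENTLY_DELETED
    return item.location


today = date(2024, 2, 1)
item = CopilotItem(created=date(2023, 12, 1))
held = Mailbox(litigation_hold=True)
print(run_timer_job(item, held, retention_days=30, today=today))
print(run_timer_job(item, held, retention_days=30, today=today))
```

Under these assumptions, a 62-day-old interaction under a 30-day policy moves to SubstrateHolds on the first pass, and a Litigation Hold keeps it there on every later pass; without the hold, the next pass would delete it. The point of the model is that the hold check sits on the final step, so a user-invisible item can persist long after its nominal retention period.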

Bing throws a wrench into data retention policies

Because Copilot can search the web, it relies on Microsoft’s search engine, Bing. Copilot generates the search terms for you:

Web search queries might not contain all the words from a user’s prompt. They’re generally based off a few terms used to find relevant information on the web. However, they may still include some confidential data, depending on what the user included in the prompt. Queries sent to the Bing Search API by Copilot for Microsoft 365 are disassociated from the user ID or tenant ID.

This is important to note because

the search query, which is abstracted from the user’s prompt and grounding data, goes to the Bing Search API outside the boundary.

Microsoft Bing is a separate business from Microsoft 365 and data is managed independently of Microsoft 365. The use of Bing is covered by the Microsoft Services Agreement between each user and Microsoft, together with the Microsoft Privacy Statement. The Microsoft Products and Services Data Protection Addendum (DPA) doesn’t apply to the use of Bing.

The Microsoft Services Agreement does not mention retention, but it does refer to the Microsoft Privacy Statement, which says:

[F]or Bing search queries, we de-identify stored queries by removing the entirety of the IP address after 6 months, and cookie IDs and other cross-session identifiers that are used to identify a particular account or device after 18 months.

This says nothing about the text of the query itself, which implies indefinite retention. And because queries from Microsoft 365 Copilot are already disassociated from user and tenant IDs, this de-identification adds no further protection. Additionally, the Privacy Statement notes that:

Microsoft retains personal data for as long as necessary to provide the products and fulfill the transactions you have requested, or for other legitimate purposes such as complying with our legal obligations, resolving disputes, and enforcing our agreements.

And then later:

Is there an automated control, such as in the Microsoft privacy dashboard, that enables the customer to access and delete the personal data at any time?

If there is not, a shortened data retention time will generally be adopted.

This is all quite confusing, and in the end it appears you should assume indefinite retention of any Microsoft 365 Copilot-generated queries that trigger external web searches through Bing. This applies only to the search terms, though, not the entire prompt. For the most part this won’t be a problem, but it could be an issue if you were a private equity analyst researching a target company and entered a prompt like:

Is Acme Corp.’s revenue actually $15 million a year?

This could conceivably generate a search query such as:

Acme Corp. revenue $15 million

Private company revenue isn’t generally public information. Although Microsoft would secure this query, people working with especially sensitive data might worry about search terms containing such data being exposed in a breach at Microsoft.
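The Privacy Statement’s de-identification schedule can be modeled in a few lines to show what it does and does not remove. This is a hypothetical Python sketch, not Bing’s actual storage format: the `StoredBingQuery` record, its fields, and the example IP address and cookie ID are illustrative, and it encodes my reading that no removal date applies to the query text itself.

```python
from dataclasses import dataclass
from typing import Optional

# Schedule quoted from the Microsoft Privacy Statement for Bing search queries.
IP_REMOVAL_MONTHS = 6
CROSS_SESSION_ID_REMOVAL_MONTHS = 18


@dataclass(frozen=True)
class StoredBingQuery:
    # Hypothetical record layout, for illustration only.
    text: str
    ip_address: Optional[str]
    cookie_id: Optional[str]


def after_deidentification(query: StoredBingQuery,
                           age_months: int) -> StoredBingQuery:
    """Return what the stated schedule would leave of a query after age_months."""
    return StoredBingQuery(
        # No removal date is stated for the query text, so it is always kept.
        text=query.text,
        ip_address=query.ip_address if age_months < IP_REMOVAL_MONTHS else None,
        cookie_id=(query.cookie_id
                   if age_months < CROSS_SESSION_ID_REMOVAL_MONTHS else None),
    )


query = StoredBingQuery("Acme Corp. revenue $15 million", "203.0.113.7", "cookie-123")
for months in (3, 12, 24):
    print(months, after_deidentification(query, months))
```

After 24 months only the search text survives. For queries sent by Microsoft 365 Copilot, which are already disassociated from user and tenant IDs, the identifier fields may be empty from the start, in which case the schedule changes nothing and the text is all that was ever stored.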

Admins, however, can block all users from making these types of queries, and individual users can control whether such queries are made during their own sessions. So it might make sense to restrict web-based queries in certain situations. With that said:

Think about how many queries your team members have run in Google or Bing over the years. Much of that data probably contains confidential snippets and is still being retained by Google or Microsoft.

Disabling browsing will reduce performance by cutting Copilot off from live web data; users will need to copy and paste the desired context into their Copilot prompts manually.

To find that context, they would probably just search the web using terms similar to those Copilot itself would generate, bringing you back to the same place.

This is all confusing to me because for the “vanilla” Copilot (formerly Bing Chat Enterprise, not the Microsoft 365 version), Microsoft stated unequivocally that “chat data is not saved.” I am not sure why they didn’t just plug Microsoft 365 Copilot into this existing architecture. Instead, it looks like they are using the publicly available Bing API for Microsoft 365 Copilot. Or perhaps the unequivocal statement wasn’t correct to begin with.

(Update: 1 February 2024) Finally, I would be quite curious to know how queries that ChatGPT generates and sends to Bing are retained. Even with Chat History disabled, it seems possible that prompts triggering Bing queries might be partially (and indefinitely) retained by Microsoft. I have submitted an inquiry to OpenAI on this point.

(Update: 1 February 2024) OpenAI’s response was underwhelming and implies that ChatGPT queries to Bing are in fact retained in the same way as those from Microsoft 365 Copilot:

Please note that Open ai and Microsoft are separate entities, each with its own distinct data retention policies. Therefore, the duration for which data is retained may vary between the two services…For any questions regarding Microsoft retention policies, please reach out to them directly.

Other data governance considerations

Beyond the Bing wrinkle, there are a few other things to consider:

EU data residency

If you are subject to European Union (EU) data residency requirements, it is possible to force Copilot-related data to stay within the EU’s geographical limits.

For European Union (EU) users, we have additional safeguards to comply with the EU Data Boundary. EU traffic stays within the EU Data Boundary while worldwide traffic can be sent to the EU and other countries or regions for LLM processing.

Data labelling and classification

Thankfully, most Copilot responses are labelled with the highest sensitivity label among all the grounding (RAG) information used to compose the response. There is, however, an exception: as of this writing, Copilot does not recognize Microsoft Teams meeting and chat sensitivity labels.

Thus it’s possible that you could incorporate some Teams chat data into a Copilot prompt and receive a response that isn’t labelled at the highest sensitivity level of the data it contains.

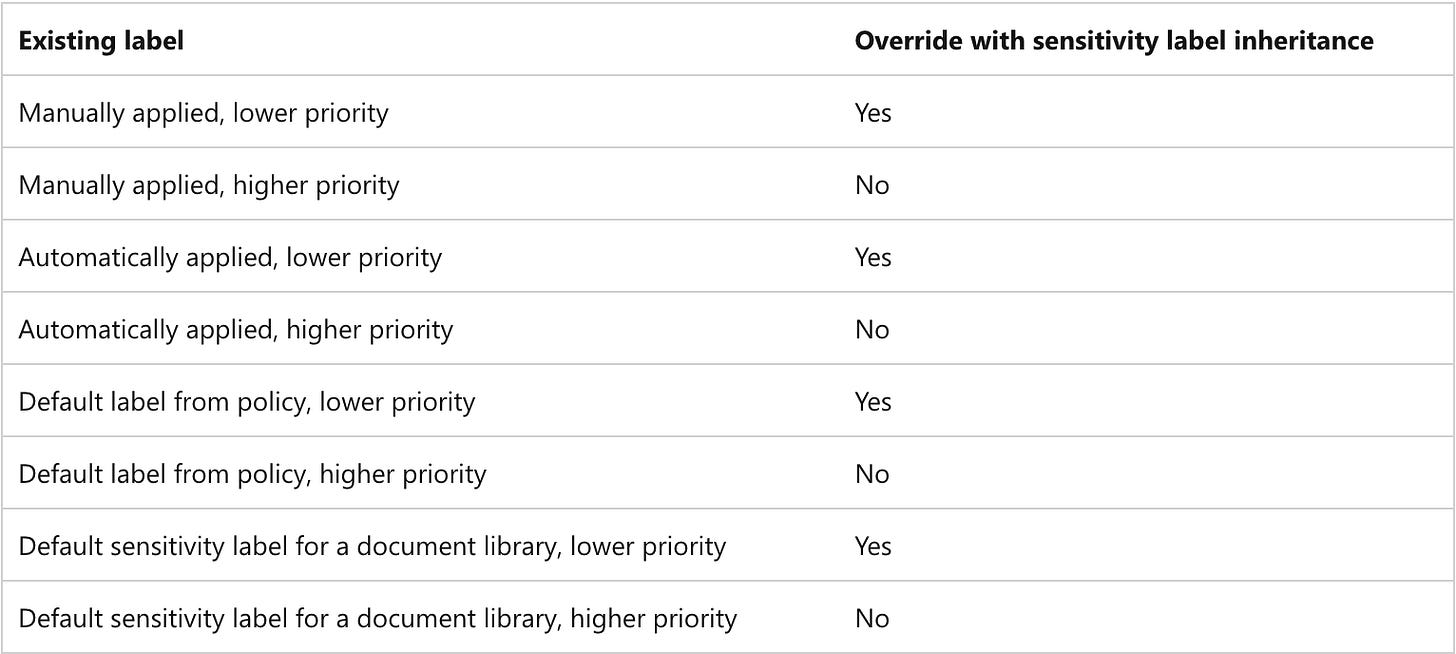

Additionally, there is a relatively complex set of rules governing how sensitivity label overrides for Copilot occur. Make sure you understand how these will be applied under realistic conditions.
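The basic inheritance rule and its Teams exception can be sketched as follows. This is a simplified, hypothetical Python model: the label names, their priority order, and the source `kind` strings are illustrative rather than Microsoft’s, and it deliberately ignores the override rules just mentioned.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical label taxonomy, ordered from lowest to highest priority.
LABEL_PRIORITY = ["Public", "General", "Confidential", "Highly Confidential"]

# Per this article, Teams meeting and chat labels were not recognized by
# Copilot at the time of writing, so their labels are not inherited.
UNRECOGNIZED_KINDS = {"teams_chat", "teams_meeting"}


@dataclass(frozen=True)
class GroundingSource:
    name: str
    kind: str  # hypothetical values, e.g. "sharepoint", "teams_chat"
    label: Optional[str]


def inherited_label(sources: Sequence[GroundingSource]) -> Optional[str]:
    """Highest-priority label among sources whose labels Copilot recognizes."""
    recognized = [
        s.label for s in sources
        if s.label is not None and s.kind not in UNRECOGNIZED_KINDS
    ]
    if not recognized:
        return None
    return max(recognized, key=LABEL_PRIORITY.index)


doc = GroundingSource("Q3 plan.docx", "sharepoint", "General")
chat = GroundingSource("Deal chat", "teams_chat", "Highly Confidential")
print(inherited_label([doc, chat]))
```

In this model, a response grounded in a “General” document and a “Highly Confidential” Teams chat inherits only “General”, which is exactly the under-labelling gap described above.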

Non-Microsoft 365 sources

Finally, it is possible for Copilot to ground responses in information sources that are not available in your organization’s Microsoft 365 tenant:

In addition to accessing Microsoft 365 content, Copilot can also use content from the specific file you're working on in the context of an Office app session, regardless of where that file is stored. For example, local storage, network shares, cloud storage, or a USB stick.

Copilot doesn’t train on prompts, responses, or RAG data, but that’s probably not the full story

This language is oddly specific:

Prompts, responses, and data accessed through Microsoft Graph aren’t used to train foundation LLMs, including those used by Microsoft Copilot for Microsoft 365.

To me, this implies that Microsoft is not using the content of Copilot interactions to train LLMs, but it is almost certainly using metadata to train other models. For example, the fact that I prompted Copilot:

by typing “summarize this PowerPoint presentation in 5 bullet points” would not be used for training.

at 10:40 AM ET using a PowerPoint file, however, is likely being used to train predictive AI models.

Finally, Microsoft makes clear that

Copilot for Microsoft 365 uses Azure OpenAI services for processing, not OpenAI’s publicly available services.

Managing risk and reward with Microsoft 365 Copilot is a part-time job

For organizations that are power users of the Microsoft suite of tools, Copilot seems like a solid choice. The key will be having the right controls in place ahead of time, especially when it comes to data classification and user training.

Those who aren’t, however, might consider sticking with OpenAI’s products for their relative simplicity, though that simplicity comes at the cost of less control over data retention.

Need help sorting this and other AI governance issues out?
