Risk management throughout the AI lifecycle

What to know from ISO/IEC 5338.

As the AI hype cycle nears (or passes) its peak, focus is turning to managing risk throughout the lifecycle of these systems. While this should have been top of mind from the start, better late than never.

Based on my conversations with a security leader for a B2B SaaS AI company, I took a closer look at one document that helps do this: ISO/IEC 5338.

Where does ISO/IEC 5338 fit in?

Just within the International Organization for Standardization (ISO) / International Electrotechnical Commission (IEC) universe, there are a lot of documents and standards to track when it comes to AI risk management:

ISO/IEC 42001: AI management (certifiable)

ISO/IEC 27001: Information security management (certifiable)

ISO/IEC 27701: Privacy information management (certifiable)

ISO/IEC 23894: Guidance for AI risk management (non-certifiable)

ISO/IEC 27090: Guidance for cybersecurity with AI (non-certifiable)

ISO/IEC 27091: Guidance for privacy protection with AI (non-certifiable)

And you can add one more to your list:

ISO/IEC 5338 (non-certifiable), which lays out AI system life cycle processes.

This document ties into the others because Control A.6 from Annex A of ISO/IEC 42001 covers the AI system life cycle and mentions ISO/IEC 5338. The latter document also complements and refers to ISO/IEC 23894.

What’s the most important part of ISO/IEC 5338 for AI risk management?

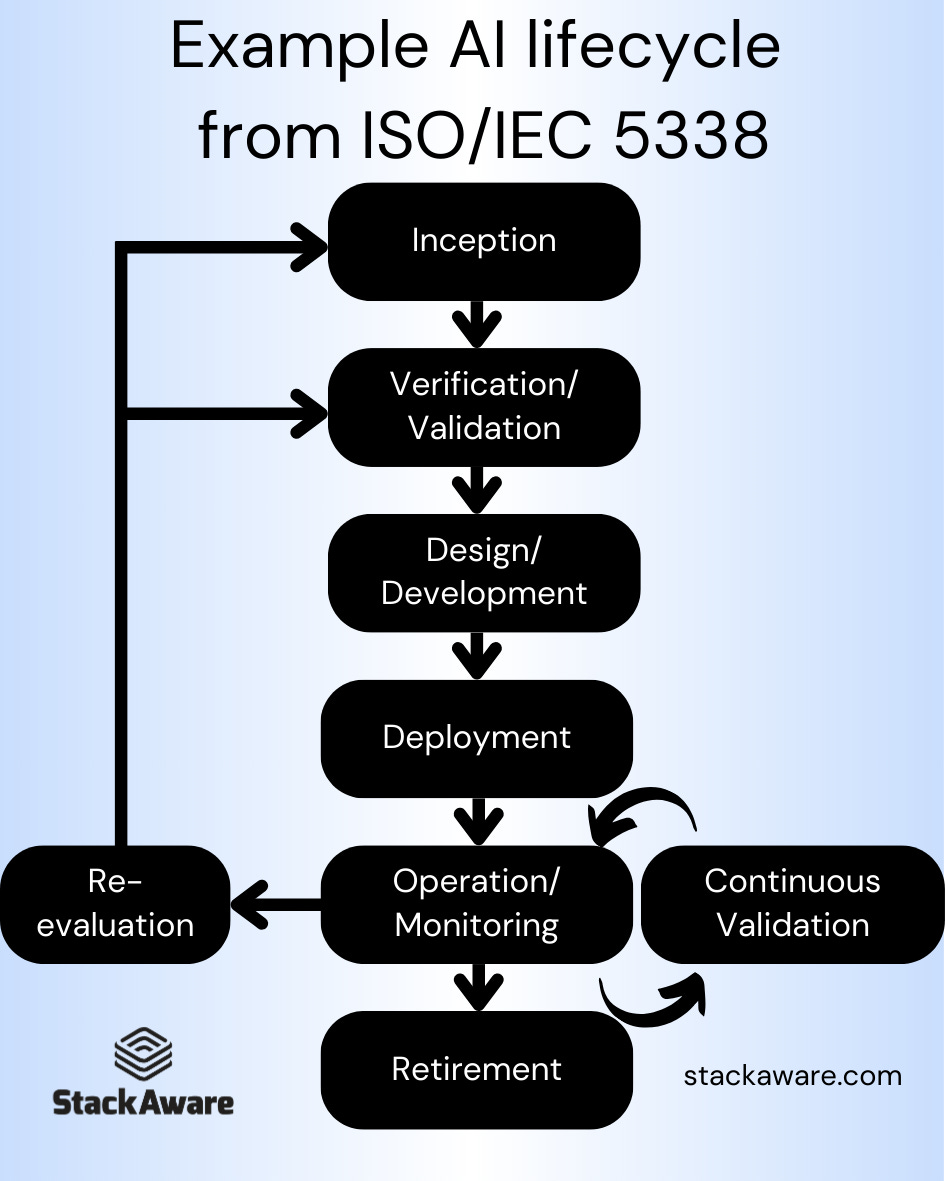

The example AI system life cycle, which I condense below:

While the document makes clear this setup is not “mandatory” or prescribed, it’s a useful framework for AI risk management decisions. The rest of the text lays out a set of processes that fit into this framework, which are useful from a project management perspective.

What are some AI risk management considerations at each stage of the ISO/IEC 5338 example life cycle?

Inception

Why do I need a non-deterministic system?

What types of data will the system ingest?

What types of outputs will it create?

What is the sensitivity of this info?

Any regulatory requirements?

Any contractual ones?

Is this cost-effective?

Design and Development

What type of model will I use (linear regressor, neural net, etc.)?

Does the system need to talk to other systems (making it an agent)?

What are the consequences of incorrect outputs?

Where am I getting the data from?

Will there be continuous training?

How / where will data be retained?

Do we need to moderate outputs?

Will the system browse the internet?

Verification and Validation

Confirm system meets your business requirements.

Consider external review (per NIST AI RMF).

Do red-teaming and penetration testing.

Do unit, integration, and user acceptance testing.

Deployment

Would deploying the system be within our risk appetite?

If not, who is signing off and what is the justification?

Train users and impacted parties.

Update shared security model.

Publish documentation.

Add to asset inventory.
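The first two deployment questions amount to a gate: either the assessed risk is within appetite, or a named person accepts the excess with a written justification. Here is a minimal Python sketch of that gate. The numeric `risk_score`, the `risk_appetite` threshold, and the `RiskAcceptance` record are all illustrative assumptions; ISO/IEC 5338 does not define any of them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RiskAcceptance:
    """Who accepted risk above appetite, and why (hypothetical structure)."""
    approver: str
    justification: str


def may_deploy(risk_score: float, risk_appetite: float,
               acceptance: Optional[RiskAcceptance] = None) -> bool:
    """Return True if deployment can proceed.

    risk_score and risk_appetite are assumed to share one scale
    (for example, expected annual loss); the scale itself is up to you.
    """
    if risk_score <= risk_appetite:
        return True
    # Above appetite: require a named approver and a non-empty justification.
    return (acceptance is not None
            and bool(acceptance.approver.strip())
            and bool(acceptance.justification.strip()))
```

Forcing the exception path to carry a name and a reason means the sign-off is recorded at the moment of the decision, not reconstructed after an incident.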

Operation and Monitoring

Do we have a coordinated vulnerability disclosure program?

Do we have a whistleblower portal / hotline / email?

How are we tracking performance and model drift?
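One common way to start answering the drift question is the Population Stability Index (PSI), which compares the distribution of a feature or model score at training time against what the system sees in production. The sketch below is my own illustration and not something ISO/IEC 5338 prescribes. It assumes numeric inputs and equal-width bins taken from the baseline's range.

```python
import math
from typing import Sequence


def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10) -> float:
    """Compare two samples of a numeric feature or model score.

    Bin edges come from the baseline's range; a small epsilon avoids
    log(0) when a bin is empty.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(sample: Sequence[float]) -> list:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        eps = 1e-6
        return [max(c / len(sample), eps) for c in counts]

    p, q = proportions(baseline), proportions(current)
    # PSI = sum over bins of (q_i - p_i) * ln(q_i / p_i)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the threshold that triggers a review should come from your own risk appetite.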

Continuous Validation

Is the system still meeting our business requirements?

If there is an incident or vulnerability, what do we do?

What are our legal disclosure requirements?

Should we disclose even more?

Do regular audits.

Re-evaluation

Has the system exceeded our risk appetite?

If there was an incident, do a root cause analysis.

Do we need to change policies?

Revamp procedures?

Retirement

Do we have a business need to retain the model or data? A legal one?

Delete everything we don’t need, including backups.

Audit the deletion of these.
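The retirement steps above boil down to a reconciliation. Take everything tied to the system, set aside what you have a documented reason to keep, and confirm the rest is gone, backups included. Below is a minimal Python sketch of that check. The `Asset` record and the locations used with it are hypothetical, and how you enumerate what still exists in storage is assumed to happen elsewhere.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Asset:
    """One item tied to the retired system (model weights, dataset, backup)."""
    location: str
    retention_reason: Optional[str] = None  # e.g. "legal hold"; None means delete


def audit_deletion(inventory: Iterable[Asset],
                   still_present: Set[str]) -> List[Asset]:
    """Return assets with no retention reason that still exist after cleanup."""
    return [a for a in inventory
            if a.retention_reason is None and a.location in still_present]
```

An empty result is the evidence you want to keep for the audit. Anything returned is either a missed deletion or a retention decision that was never written down.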

Need help managing risk throughout your full AI life cycle?

This list is just a sample of questions you’ll need to answer when:

Building

Deploying

Maintaining

AI systems.

StackAware keeps on top of all of the risks throughout the entire life cycle, from inception to retirement.
