Block AI tools from scraping your site in 3 minutes

How to tell bots and humans to buzz off (although they might not listen).

TL;DR

If you want to block major generative AI tools from scraping your web site:

Add the below to your robots.txt file¹:

```
User-agent: ChatGPT-User
Disallow: /

User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: Twitterbot
Disallow: /

User-agent: FacebookBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: cohere-ai
Disallow: /

User-agent: omgilibot
Disallow: /

User-agent: omgili
Disallow: /

User-agent: Amazonbot
Disallow: /

User-agent: Applebot
Disallow: /

User-agent: PerplexityBot
Disallow: /

User-agent: YouBot
Disallow: /

User-agent: Bytespider
Disallow: /

User-agent: ImagesiftBot
Disallow: /

User-agent: Diffbot
Disallow: /

User-agent: Claude-Web
Disallow: /
```

Under advisement of competent legal counsel, consider changing your site’s terms and conditions to forbid the undesired activity. Understand the potential limitations of such moves.

Why you should (or shouldn’t) block AI bots

If you publish only basic content on your web site and want it to be more likely to surface when users query ChatGPT or any other generative AI tool, then scraping isn’t necessarily a problem.

If, however, you have concerns about your copyrighted material being used in these tools, you might consider blocking them by adding the above restrictions to your robots.txt file. I combined information from several different articles to build this consolidated list covering ChatGPT, Google Bard, Anthropic, Twitter/X, Meta, the Common Crawl bot, and several others.
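Before deploying rules like these, it can be worth feeding them to a robots.txt parser to confirm they say what you think they say. Below is a minimal sketch using Python's standard-library `urllib.robotparser`; the `is_blocked` helper, the trimmed rule set, and the example.com URL are my own illustrative assumptions, and the crawlers themselves may use different parsers. It also shows one pitfall with this particular parser: a blank line directly after a `User-agent` line ends that group, so the `Disallow` that follows is ignored.

```python
from urllib.robotparser import RobotFileParser

# Excerpt of the rules above (illustrative; paste in your full file).
GOOD = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /
"""

# Same GPTBot rule, but with a blank line between User-agent and Disallow.
SPACED = """\
User-agent: GPTBot

Disallow: /
"""


def is_blocked(robots_txt, agent, url):
    """Return True if the parsed rules forbid `agent` from fetching `url`."""
    parser = RobotFileParser()
    # parse() also marks the rules as loaded, so can_fetch() will consult them.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


if __name__ == "__main__":
    url = "https://example.com/some-post"  # placeholder URL
    for agent in ("GPTBot", "ClaudeBot", "Googlebot"):
        print(agent, "blocked" if is_blocked(GOOD, agent, url) else "allowed")
    # This parser drops the GPTBot group entirely because of the blank line.
    print("spaced GPTBot", "blocked" if is_blocked(SPACED, "GPTBot", url) else "allowed")
```

Running it reports GPTBot and ClaudeBot as blocked, Googlebot as allowed (no group names it and there is no `*` group), and the spaced GPTBot variant as allowed.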

You can also block these tools from specific portions of your site.
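For example, suppose (hypothetically) you want to keep AI crawlers out of a `/premium/` section while leaving the rest of the site, such as `/blog/`, crawlable. `Disallow` values match by path prefix, which this sketch checks with Python's `urllib.robotparser`; the paths, agents chosen, and example.com domain are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical site layout: /premium/ is off-limits to these AI crawlers,
# everything else (e.g. /blog/) stays crawlable. Paths are placeholders.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /premium/

User-agent: CCBot
Disallow: /premium/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("GPTBot", "CCBot"):
    for path in ("/blog/hello", "/premium/report"):
        allowed = parser.can_fetch(agent, "https://example.com" + path)
        # Disallow matches by prefix, so only paths under /premium/ are refused.
        print(f"{agent} {path}: {'allowed' if allowed else 'blocked'}")
```

Each agent should come back allowed on `/blog/hello` and blocked on `/premium/report`.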

But know that this approach is neither foolproof nor airtight: it will only stop well-behaved actors who choose to respect robots.txt files (and they may not even be required to; see below). Additionally, new generative AI tools are popping up all the time, so you will likely be playing “whack-a-mole” to block each new one as it appears. Check darkvisitors.com for updates (h/t to Dino Dunn for flagging this for me).
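One way to make the whack-a-mole less tedious is to keep the agent tokens in a list and regenerate robots.txt whenever you add one. This is a minimal sketch, not a vetted tool: `AI_AGENTS`, `build_robots_txt`, and `unblocked` are hypothetical names, and the self-check only confirms that Python's parser reads the output as intended. It says nothing about whether a given bot will actually honor it.

```python
from urllib.robotparser import RobotFileParser

# Agent tokens from the blocklist above; append new ones as they appear.
AI_AGENTS = [
    "ChatGPT-User", "GPTBot", "Google-Extended", "ClaudeBot",
    "Twitterbot", "FacebookBot", "CCBot", "cohere-ai",
    "omgilibot", "omgili", "Amazonbot", "Applebot",
    "PerplexityBot", "YouBot", "Bytespider", "ImagesiftBot",
    "Diffbot", "Claude-Web",
]


def build_robots_txt(agents, path="/"):
    """Return robots.txt text with one User-agent/Disallow group per agent.

    Each Disallow line sits directly under its User-agent line, and groups
    are separated by a single blank line.
    """
    groups = [f"User-agent: {agent}\nDisallow: {path}" for agent in agents]
    return "\n\n".join(groups) + "\n"


def unblocked(robots_txt, agents, url="https://example.com/"):
    """Return the agents Python's parser would still allow to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if parser.can_fetch(agent, url)]


if __name__ == "__main__":
    robots = build_robots_txt(AI_AGENTS)
    leftovers = unblocked(robots, AI_AGENTS)
    if leftovers:
        raise SystemExit(f"Not blocked: {leftovers}")
    print(robots, end="")  # redirect this into your robots.txt
```

Agents you never listed, such as Googlebot, are left untouched, which keeps ordinary search crawling separate from the AI blocklist.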

And there is a balance to be had here.

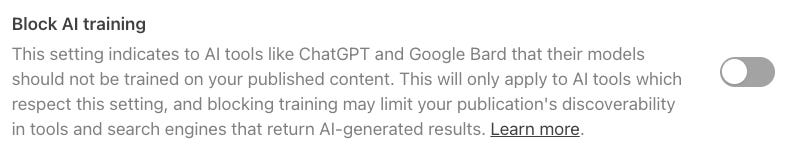

It’s likely you will be penalized in search result or social media rankings as a result of this type of blocking. Substack includes its own built-in blocking functionality, and you’ll note it is not enabled for Deploy Securely:

Add teeth with terms and conditions?

In addition to providing machine-readable instructions to bots, you can give human-readable ones as well. I am not an attorney, and this is not legal advice, but here is what the New York Times recently added to its terms of use. The first part simply defines terms like the “Site” and “Services”:

If you choose to use certain NYT products or services displaying or otherwise governed by these Terms of Service, including NYTimes.com (the “Site”), NYT’s mobile sites and applications, any of the features of the Site, including but not limited to RSS feeds, APIs, and Software (as defined below) and other downloads, and NYT’s Home Delivery service (collectively, the "Services"), you will be agreeing to abide by all of the terms and conditions of these Terms of Service between you and NYT.

The next block defines “Content” and “non-commercial use”:

The contents of the Services, including the Site, are intended for your personal, non-commercial use. All materials published or available on the Services (including, but not limited to text, photographs, images, illustrations, designs, audio clips, video clips, “look and feel,” metadata, data, or compilations, all also known as the “Content”) are protected by copyright, and owned or controlled by The New York Times Company or the party credited as the provider of the Content.

Further down, the terms of use ban using the Content to train AI models:

Non-commercial use does not include the use of Content without prior written consent from The New York Times Company in connection with: (1) the development of any software program, including, but not limited to, training a machine learning or artificial intelligence (AI) system.

These types of “browsewrap” agreements, where a site asserts you must abide by certain conditions in order to continue using it but doesn’t get any sort of affirmative consent from the user, do not appear very effective from a legal perspective.

According to the legal software provider Ironclad, in a sample of cases from 2020 only 14% of browsewrap agreements were found enforceable when tested in court.

Additionally, the 9th Circuit Court of Appeals has ruled (in 2019, and again in 2022) that scraping public web sites does not violate the Computer Fraud and Abuse Act (CFAA), according to the CHIP Law Group.

In its late 2023 lawsuit against OpenAI and Microsoft for using the newspaper’s content to train AI models without permission, the New York Times alluded to its browsewrap terms (above) but did not specifically note either company’s alleged violation of them as a numbered count in the complaint. Rather, its case focused on copyright infringement, Digital Millennium Copyright Act (DMCA) violations, trademark dilution, and unfair competition.

Again, you should consult competent legal counsel regarding whether and how to modify your terms of use. But now you have some data about what others have done and how effective it has been in blocking scraping by AI vendors or those who provide them data.

Balancing risk and reward with AI

As with pretty much all technology and security decisions, how to address AI tools scraping your site is ultimately a business-driven one. Unfortunately, it’s going to be just one of dozens, if not hundreds, of such decisions you’ll need to make as the pace of artificial intelligence development accelerates.

Are you looking for a trusted partner and advisor to help you manage the cybersecurity, compliance, and privacy issues stemming from the expanding use of AI?

[Update July 30, 2024] A previous version of this list included the agents anthropic-ai and Claude-Web. Jason Koebler from 404 Media reached out to me and flagged that the paper “Consent in Crisis: The Rapid Decline of the AI Data Commons” suggested neither anthropic-ai nor Claude-Web is currently in use. In the article he subsequently published, Anthropic noted these were deprecated user agents but said it would still honor opt-out requests for them in robots.txt files.

[Update April 17, 2025] Although Anthropic apparently is not using Claude-Web, someone is, according to Cloudflare. I have added it back to the blocklist.