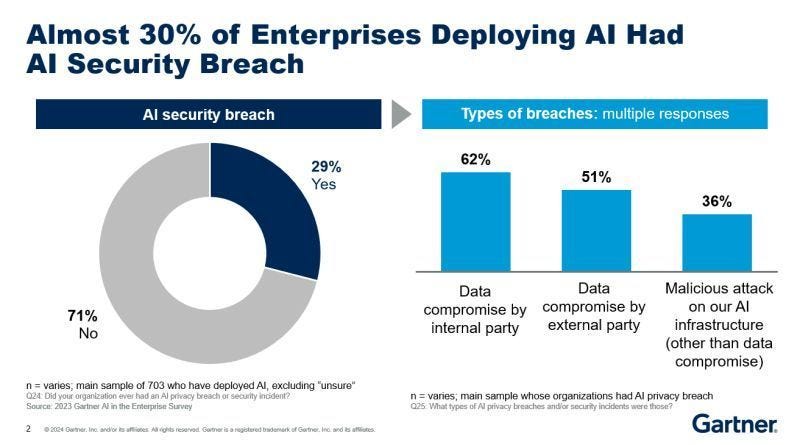

Almost 30% of enterprises deploying AI had an AI security breach, according to Gartner

(Accidental) insider threats look like the biggest risk.

Key takeaways

Data compromise by an internal party is the most common event. The term isn’t defined, but to me it strongly suggests an employee (or similar insider) unintentionally feeding data into AI training. It could also refer to AI tools generating sensitive internal data.

Data compromise by an external party suggests that a third (or more distant) party was responsible for the unintended training, but the impact fell on the organization owning the data.

Malicious attacks on AI infrastructure are a separate category, which I interpret as covering things like prompt injection and data poisoning. It makes sense that these are rarer than human error.

The sample size of 703 is large, and it excludes respondents who were “unsure” whether they had deployed AI. I assess that those who don’t know about their AI assets are more likely to have a breach than those who do.

Caveats

The question asked was “did your organization EVER had [sic] an AI privacy breach or security incident?” If the timeline is basically infinite, the number must be higher than if we were just looking at the last year.

Different organizations define a security or privacy incident differently. So what gets flagged at one might not register at another.

As with all breach reporting, there is selection bias. It’s highly likely that organizations reporting a breach are MORE sophisticated. That's because they have the logging, monitoring, and employee training to KNOW when they have had an incident.

“Deploying AI” is not defined. Does this mean self-hosted proprietary models running in IaaS? A single employee using ChatGPT? Not clear.
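To make the first caveat concrete, here is a minimal sketch of how an "ever" question inflates the headline number compared with a one-year window. The 10% annual incident rate is purely hypothetical (it is not from the Gartner survey), and the function assumes each year is independent, which is a simplification.

```python
def prob_ever(annual_rate: float, years: int) -> float:
    """Chance of at least one incident over `years` years.

    Assumes a constant annual rate and independent years (both hypothetical).
    """
    return 1 - (1 - annual_rate) ** years


# Hypothetical 10% annual rate, NOT a figure from the survey.
for years in (1, 3, 5):
    print(f"{years} year(s): {prob_ever(0.10, years):.1%}")
```

Under these assumptions, the same underlying risk produces a noticeably larger number the longer the lookback window, which is why an "ever" figure can't be compared directly with annual breach statistics.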

What I advise my clients

An AI governance program can address all three types of breaches identified. And the easiest thing to address would be data compromises by internal parties! This is about policies, procedures, and training.

Supply chain AI risk is real. Think about how you are monitoring your suppliers. Not just once, but continuously.

If you expose an AI tool to the internet (even if accidentally), someone is likely to notice and attack it. Red-teaming and guardrails are table stakes here.

A complete inventory is the basis for any (AI) security program. Start there - and get help if you need it!
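As a starting point for the inventory mentioned in the last item, here is a rough sketch of what a single record might capture. Every field name, category label, and the `needs_review` rule are illustrative assumptions, not a standard schema; adapt them to your own program.

```python
from dataclasses import dataclass, field


@dataclass
class AIAsset:
    """One entry in a hypothetical AI inventory."""
    name: str
    owner: str                      # accountable person or team ("" if unknown)
    deployment: str                 # e.g. "self-hosted", "saas", "vendor-embedded"
    data_classes: list[str] = field(default_factory=list)  # e.g. ["public", "confidential"]
    internet_exposed: bool = False


def needs_review(asset: AIAsset) -> bool:
    """Flag assets that are exposed, handle confidential data, or lack an owner."""
    return (
        asset.internet_exposed
        or "confidential" in asset.data_classes
        or not asset.owner
    )


# Example entries (made up for illustration).
inventory = [
    AIAsset("support-chatbot", "cx-team", "saas", ["confidential"], internet_exposed=True),
    AIAsset("code-assistant", "", "saas", ["internal"]),
    AIAsset("doc-summarizer", "legal-ops", "self-hosted", ["public"]),
]

for asset in inventory:
    if needs_review(asset):
        print(f"Review: {asset.name}")
```

Even a simple list like this surfaces the gaps that matter most: tools nobody owns, tools touching sensitive data, and tools reachable from the internet.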

Worried about AI data breaches?

StackAware helps AI-powered software, financial services, and healthcare companies manage risks related to:

Cybersecurity

Compliance

Privacy

Want to learn more about how we can secure your AI deployments?