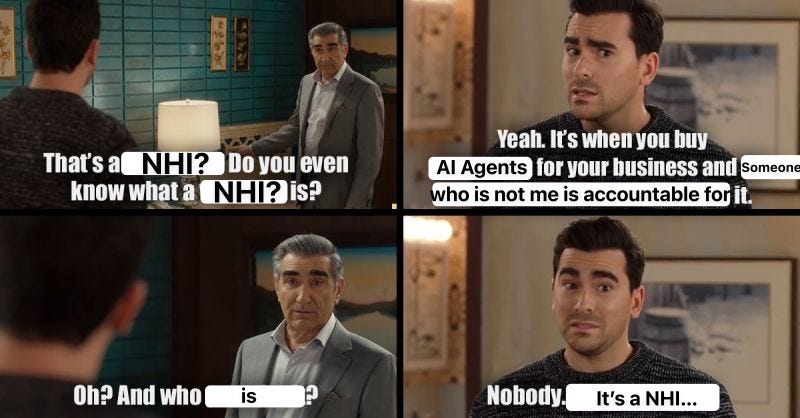

Non-human identities

And why we should stop talking about them.

Non-human identities (NHI) are the new buzzword...

…we should stop using.

Every system needs a human owner

Talking about NHIs implies systems do things “on their own” and removes - or attenuates - human accountability. Just like with service accounts, it’s tempting to say that “nobody” owns a given credential or key, but it’s the wrong approach.

Especially with the rise of agentic AI tools, like ChatGPT Operator or Claude's computer control, neither security nor business teams should want users to feel free from the consequences of their actions.

Courts have already made clear that blaming a “rogue” chatbot for its outputs is not an acceptable defense.

I’ve also seen security teams tie themselves in knots thinking about how to handle auditing for these types of situations, when there is:

An easy way to clarify agentic AI accountability

Treat credentials, application programming interface (API) keys, and anything else used by an AI agent like an asset or data set. Inventory them and assign owners. These owners should be cross-functional business leaders - the security team should only own security assets, nothing else.

Make sure every owner knows s/he is responsible for whatever happens to/with/because of these assets. Just because an agent is using a credential to do something without direct supervision does not mean “no one” is accountable.

Review the company's risk appetite (maybe increase it?) to account for the potential gains from autonomous systems.

Understand that with non-deterministic AI systems, bad things will happen through no fault of the owner. StackAware created the Artificial Intelligence Risk Scoring System (AIRSS) to model these outcomes.

Nonetheless, hold owners accountable for how AI agents behave. This doesn't necessarily mean firing people for an AI agent's mistake, but they need to account for the capabilities - and failure modes - of systems under their control.
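
The inventory-and-owner steps above can be sketched as a tiny registry. This is only an illustration under stated assumptions: the `AgentCredential` class, its fields, and the example entries are all hypothetical, not part of AIRSS or any StackAware tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentCredential:
    """One credential or API key used by an AI agent (hypothetical schema)."""
    name: str
    used_by: str                  # the agent or system that uses the credential
    owner: Optional[str] = None   # the accountable human, never "nobody"


def unowned(inventory: list[AgentCredential]) -> list[AgentCredential]:
    """Return every credential that has no named human owner."""
    return [c for c in inventory if not (c.owner and c.owner.strip())]


# Illustrative entries only; the names are made up.
inventory = [
    AgentCredential("crm-api-key", used_by="sales-agent", owner="VP Sales"),
    AgentCredential("billing-token", used_by="invoice-agent"),
]

for cred in unowned(inventory):
    print(f"{cred.name} (used by {cred.used_by}) has no accountable owner")
```

Running a check like this on a schedule is one way to make sure no credential stays ownerless. The harder part, making sure each owner understands the responsibility, is not something code can settle.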

Don’t let people avoid accountability as they deploy more powerful and independent systems

In my LinkedIn post on this topic, some objected to the concept of designating a single person as accountable for an AI agent’s actions. They did so because the actions of an AI agent could be unknowable in advance.

In response, I would say life is full of uncertainty.

And if you aren’t sufficiently confident the benefits of deploying AI agents will outweigh the costs:

Don’t do it.

@walter thanks for the insight.

How do you feel about establishing a chain of accountability with roles such as sponsor -> owner -> operator?

E.g. in a trading system

Sponsor - Alice - Chief Investment Officer

Owner - Bob - Head of Algorithmic Trading

Operator - Charles - Senior Agent Operator

Then tack on a few rotating roles such as on.call@acme.com and incident.response@acme.com
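
A chain like this could be written down as data, roughly as below. It is a sketch only: the `Role` and `AccountabilityChain` classes and the `escalation_path` helper are hypothetical names, and the bottom-up escalation order is an assumption rather than something stated in the proposal.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Role:
    title: str    # position in the chain, e.g. "Owner"
    person: str   # the named human holding the role
    job: str      # that person's job title


@dataclass
class AccountabilityChain:
    system: str
    roles: list[Role]                                   # ordered most senior first
    rotating: list[str] = field(default_factory=list)   # shared mailboxes, not named people

    def escalation_path(self) -> list[str]:
        """People to contact, from the operator up to the sponsor (assumed order)."""
        return [r.person for r in reversed(self.roles)]


chain = AccountabilityChain(
    system="trading system",
    roles=[
        Role("Sponsor", "Alice", "Chief Investment Officer"),
        Role("Owner", "Bob", "Head of Algorithmic Trading"),
        Role("Operator", "Charles", "Senior Agent Operator"),
    ],
    rotating=["on.call@acme.com", "incident.response@acme.com"],
)

print(" -> ".join(chain.escalation_path()))
```

Keeping the rotating mailboxes in a separate field from the named roles makes it explicit that they are contact points, while accountability stays with specific people.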