Govern AI risk with the NIST RMF: accountability, communication, third parties, and more

Part 3 of a series on the National Institute of Standards and Technology's Artificial Intelligence Risk Management Framework.

Doing a teardown of the NIST AI RMF has been an incredibly illuminating experience.

The nearly limitless security, governance, compliance, and privacy implications of artificial intelligence are overwhelming.

And it’s great that NIST has started the process of building a comprehensive framework to address them.

But the RMF is just the bones. And they need a lot of meat on them!

You, understanding this, are now deep in the middle of a series doing just that. Zooming out, here’s what the series looks like:

Part 1: Frame AI risk with the NIST RMF

Part 2: Govern AI risk with the NIST RMF: policies, procedures, and compliance

Part 3: Govern AI risk with the NIST RMF: accountability, communication, third parties, and more (you are here)

Parts 4 and later: Map, Measure, and Manage AI risk with the RMF

In this section, I am going to continue breaking down the GOVERN function of the framework. Sub-bullets #2-6 of the original document aren’t nearly as large as #1 was, so I’ll cover them all in this post. And for those following along, there is an additional implementation playbook that has some helpful content.

2. Accountability

2.1 Roles and responsibilities are clear

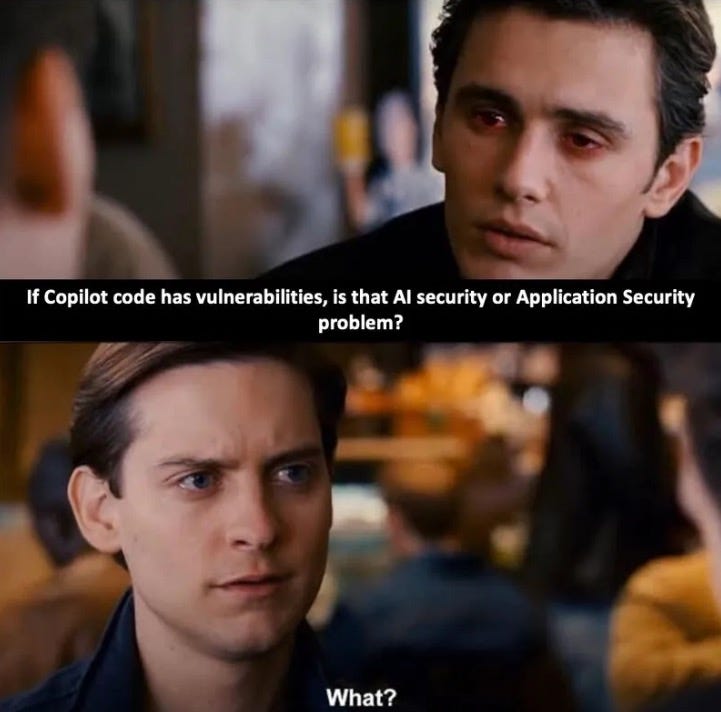

I think this meme from Pramod Gosavi sums things up nicely:

Basically, the key point here is that you need a clear methodology for describing risks and who is responsible for them. And this needs to happen in a mutually exclusive, collectively exhaustive way: every risk has an owner, and no risk has more than one.

Furthermore, a “team,” “committee,” or any other group cannot own any responsibility related to AI risk (or anything else for that matter). Organizations need a single accountable individual for each risk management decision.

Check out the PRIDE model for details on exactly how to structure them.
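To make the single-owner rule concrete, here is a minimal sketch of a risk register check. The `RiskEntry` fields, the `GROUP_MARKERS` word list, and `validate_register` are all my own illustrative assumptions, not anything defined by the NIST RMF or the PRIDE model; a real register would likely check owners against an HR directory instead of guessing from names.

```python
from dataclasses import dataclass

# Hypothetical words suggesting the "owner" is a group, not a person.
GROUP_MARKERS = ("team", "committee", "board", "group", "council")


@dataclass(frozen=True)
class RiskEntry:
    risk_id: str
    description: str
    owner: str  # intended to be exactly one named individual


def validate_register(entries):
    """Return a list of problems: duplicate risk IDs, missing owners,
    or owners that look like groups rather than individuals."""
    problems = []
    seen_ids = set()
    for entry in entries:
        # Mutually exclusive: each risk should appear (and be owned) once.
        if entry.risk_id in seen_ids:
            problems.append(f"{entry.risk_id}: duplicate entry")
        seen_ids.add(entry.risk_id)

        owner_words = entry.owner.strip().lower().split()
        if not owner_words:
            problems.append(f"{entry.risk_id}: no owner")
        elif any(marker in owner_words for marker in GROUP_MARKERS):
            problems.append(f"{entry.risk_id}: owner '{entry.owner}' looks like a group")
    return problems


register = [
    RiskEntry("R1", "Customer data pasted into a public chatbot", "Alice Smith"),
    RiskEntry("R2", "Model gives inaccurate answers to customers", "Security Committee"),
    RiskEntry("R1", "Same risk recorded a second time", "Bob Jones"),
]
for problem in validate_register(register):
    print(problem)
```

The check is deliberately crude. Its only job is to flag entries where accountability is shared or missing, so a human can fix them.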

2.2 Stakeholders trained

Another point that should be a no-brainer, but probably isn’t: you need to make sure your team and all stakeholders are trained on their roles and responsibilities when it comes to AI risk management.

If Jimmy on the marketing team is taking the entire CRM - including full names, email addresses, and a bunch of other personal information - and just dumping it into ChatGPT without a care or any data protection measures, that’s probably a failure of education on the part of the security and leadership teams.

At a minimum, make sure you have a policy in place and everyone in the organization knows the basic outlines of it.

Those who are regularly interacting with AI systems should fully understand what types of data to input, what not to, and whom to ask if they have questions.
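Training comes first, but a lightweight technical guardrail can back it up. The sketch below strips anything that looks like an email address from text before it is pasted into an AI tool. The `redact_emails` helper and its regular expression are my own assumptions for illustration; this is not a complete data protection measure and it will not catch names, phone numbers, or other personal information.

```python
import re

# Simple, intentionally loose pattern for things that look like email addresses.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text, placeholder="[REDACTED_EMAIL]"):
    """Replace email-like strings with a placeholder.

    Returns a (clean_text, number_of_redactions) tuple.
    """
    return EMAIL_PATTERN.subn(placeholder, text)


clean_text, count = redact_emails("Follow up with jimmy@example.com about the renewal.")
print(clean_text)
print(f"{count} address(es) redacted")
```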

2.3 Leadership accountability for decisions

This looks to be a separate point from the importance of “accountable AI.”

It speaks to the human leaders who make decisions about AI assuming the burden of their outcomes. And fully implementing bullet 2.1 is crucial to making sure this happens fairly and predictably.

Unfortunately, I am sure the AI-driven disasters of the future are going to bring with them huge amounts of finger-pointing, blame-shifting, and spinning. It has always seemed to me that in a crisis, the person who ultimately takes the blame is usually the one slowest to punish or fire his own subordinate(s).

AI aside, operating in this type of environment is incredibly corrosive to morale.

So, like in any security policy, having a relatively well-defined set of outcomes for infractions is key.

Furthermore, security leaders should ensure that a single business or mission owner signs “on the dotted line” for every risk decision (and absolutely refuse to do so themselves).

3. Diversity, inclusion, and equity

3.1 Team diversity

Looks like I got a spike in unsubscribes after my previous non-treatment of this issue, so I think I was correct that this is a no-win topic to address.

With that said, the AI RMF says something strange with respect to diversity - it includes “experience” and “expertise” as areas where this is desirable. While I agree that interdisciplinary perspectives can certainly help when managing AI risk, I don’t think that decision-making processes should necessarily take into account varying degrees of the same type of expertise.

For example, you probably aren’t going to ask the summer intern what the appropriate threshold for acceptable human deaths caused by an AI is.

Sometimes novices who don’t have the “curse of knowledge” will spot things experts cannot, but there is definitely a downside to having them weighing in with their opinions. This is especially true because of the Dunning-Kruger effect, which generally means those with the least value to offer are most likely to do so.

3.2 Role differentiation

This section was very unclear to me, even after I looked at the playbook entry for it. But the most interesting playbook requirement was to establish “policies and procedures defining human-AI configurations,” as in “configurations where AI systems are explicitly designated and treated as team members in primarily human teams.”

Thus it appears this requirement is to ensure organizations are explicit about where they are using AI. Many appear not to be doing this, for example by leaving it unclear whether customers are chatting with an AI or a human.

This dynamic will become ever more widespread as AI becomes cheaper and more effective. I think companies and consumers will need to adapt. Having a banner warning or similar notification that humans and AIs might communicate interchangeably with you is a crude but mostly effective way to at least be transparent on this topic.

4. Risk communication

4.1 Safety-first mindset

If you have read Deploy Securely from the start, you’ll know I don’t agree with this perspective.

But if you haven’t, my view is that anyone who says “safety is the #1 priority” is being intellectually dishonest. Even worse is saying that safety is a “top priority.”

If AI safety were the #1 priority, we would shut everything down and go back to the “good old days.” Existential AI risk averted!

But obviously no one wants to do that, so here we find ourselves dealing with a certain amount of safety risk.

In the end, it’s all about balancing the various risks effectively and optimally to achieve the best outcome. Quantitative analysis - of which I have given some examples - is the best way to do this.

Deploying AI securely and safely, not just saying “safety first,” is what leaders should focus on.
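As a rough illustration of what balancing risks quantitatively can look like, the sketch below compares three options by expected annual value: benefit, minus the cost of controls, minus probability times loss. The function names and every number here are made-up assumptions for the sake of the example; it is one simple way to frame the trade-off, not a prescribed method.

```python
def expected_annual_loss(annual_probability, loss_if_occurs):
    """Expected loss per year: chance of the bad outcome times its cost."""
    return annual_probability * loss_if_occurs


def net_value(annual_benefit, annual_probability, loss_if_occurs, control_cost=0.0):
    """Benefit left over after paying for controls and absorbing expected loss."""
    return annual_benefit - control_cost - expected_annual_loss(
        annual_probability, loss_if_occurs
    )


# Hypothetical figures, in dollars per year.
options = {
    "do not use AI": net_value(0, 0.0, 0),
    "deploy without controls": net_value(500_000, 0.10, 2_000_000),
    "deploy with controls": net_value(500_000, 0.02, 2_000_000, control_cost=50_000),
}

# Print options from best to worst expected value.
for name, value in sorted(options.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value:,.0f}")
```

With these invented inputs, "safety first" taken literally (not using AI at all) comes out worst, and deploying with controls beats deploying without them. Different inputs can flip the ranking, which is exactly why the numbers deserve more attention than the slogan.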

4.2 Risk documentation and communication

This is closely related to bullet points 2.1 and 2.3, with some additional recommendations when it comes to testing and communicating about AI risk.

Being transparent about the potential pitfalls of using the technology is important to using it ethically, and the NIST RMF recommends being clear about the AI system’s:

data provenance

transformations

augmentations

labels

dependencies

constraints

metadata

This is a pretty long list and I think many organizations would find it challenging or commercially non-viable to make all of this public. But it’s a good starting point and even a few of these data points can help other stakeholders make more informed risk decisions along the way.
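One low-effort way to track this list internally is a simple record with one field per item, plus a check for which ones are still blank. The `AISystemDisclosure` class and `undocumented` helper below are hypothetical names of my own; the seven fields simply mirror the list above, and free-text strings are an assumption about how you might want to store them.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class AISystemDisclosure:
    """One free-text field per documentation item in the list above."""
    data_provenance: Optional[str] = None
    transformations: Optional[str] = None
    augmentations: Optional[str] = None
    labels: Optional[str] = None
    dependencies: Optional[str] = None
    constraints: Optional[str] = None
    metadata: Optional[str] = None


def undocumented(record):
    """Return the names of fields that are still empty, in declaration order."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


record = AISystemDisclosure(
    data_provenance="Licensed support tickets, 2019-2023",
    dependencies="Third-party hosted LLM API",
)
print("Still to document:", ", ".join(undocumented(record)))
```

Even if you never publish the full record, knowing which fields are empty tells you what you cannot yet explain to a customer or auditor.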

4.3 Incident response

I was happy to see this bullet point here because incident response programs are often a laughable afterthought, even for security teams. And this leads to predictable chaos during emergencies.

Having a sound playbook for all data security incidents, not just AI ones, is absolutely key. Let me know if this is a digital product you would be interested in.

Just like with “traditional” cybersecurity incidents, responding to an AI incident involves not just technical work but also operational, legal, and public relations aspects. Organizations should incorporate all of these into their planning.

Furthermore, NIST encourages reporting of AI-related incidents to various sources, such as the AI Incident Database. This looks to be more focused on “bad outcomes” of AI, rather than cybersecurity-related incidents.

5. Engagement and feedback

5.1 Collect and integrate multi-disciplinary input

This is a pretty broad suggestion that those using AI systems collect qualitative input through a variety of means including:

User interviews and research

Focus groups

Bug bounty / Coordinated vulnerability disclosure (CVD) programs

Just like any good software product or system, you’ll want to make sure you have a method for consuming and prioritizing feedback from users. Many organizations will create different buckets for different types of work to be done (functional requirements in Jira, security bugs in a vulnerability management tool, etc.), but in my product management experience, I have found a unified backlog to be key.

And hey, you might even be able to use AI to help you prioritize it!
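Here is a minimal sketch of what a unified backlog could look like: items from separate sources get merged into one list and ranked by the same yardstick. The `BacklogItem` fields and the value-divided-by-effort score are my own illustrative assumptions; swap in whatever prioritization scheme your team actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BacklogItem:
    source: str    # where the item came from, e.g. a ticket tracker or an interview
    title: str
    value: float   # hypothetical estimate of benefit or risk reduction
    effort: float  # hypothetical estimate of work required; must be > 0


def unified_backlog(*sources):
    """Merge any number of item lists and rank them by value per unit of effort."""
    merged = [item for source in sources for item in source]
    return sorted(merged, key=lambda item: item.value / item.effort, reverse=True)


features = [BacklogItem("Jira", "Export chat history to CSV", value=8, effort=4)]
security_bugs = [BacklogItem("vulnerability tool", "Fix prompt injection in search", value=9, effort=3)]
user_feedback = [BacklogItem("user interview", "Clarify the AI disclosure banner", value=5, effort=1)]

for item in unified_backlog(features, security_bugs, user_feedback):
    print(f"{item.value / item.effort:.1f}  [{item.source}] {item.title}")
```

The point of scoring everything on one scale is that a security bug and a feature request compete directly, instead of each winning inside its own bucket.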

5.2 Implement feedback

Even after reading through the playbook, it was hard to tell how this was different from 5.1. The main point of divergence appears to be that this sub-bullet is focused on the decision-making and implementation side of things while the first sub-bullet is just focused on collection.

6. Third party risk

6.1 Policies and procedures to assess and manage risk from third parties

There is basically no way to avoid third-party risk in the modern economy unless you want to build 100% of your own hardware and write 100% of your own software.

So being able to manage such risk in a coherent manner is vital. And this is no different with AI.

Some older issues of Deploy Securely, which I think have aged quite well and are completely applicable to AI systems, give a framework for how to do just that:

3rd (and greater) party code risk: managing known vulnerabilities to secure critical applications

Known unknowns: managing risk from vulnerabilities in 3rd (and greater) party software and systems

Security questionnaires: worth the trouble?

External audits: a better solution for 3rd (and greater) party risk management?

Technical due diligence for identifying cybersecurity risk in external parties

What is the difference between supply chain, third-party, and vendor risk management?

Sub-bullet 6.1 also explicitly calls out intellectual property (IP) infringement. While a good thought, it is not clear at all how the current IP regime will weather the storm of generative AI.

Vaguely warning of “IP infringement risk” might make you look smart but I have yet to see anyone provide any sort of consistent framework for how to navigate it. That’s aside from “don’t use AI,” which I’m pretty sure you aren’t going to do if you are reading this post.

Realistically, organizations will need to assume some risk here to harness AI tools, while identifying “hot button” IP issues (namely those involving litigious players) to avoid.

6.2 Incident response for “high-risk” third-party AI systems

This is closely related to 4.3, except with the additional wrinkle of involving third-party systems. As I mentioned, though, that is going to be applicable for basically any AI tool (or piece of software) you use.

As this section points out, you’ll also want a plan to address non-security issues like inaccuracy in your third-party contingency planning.

Conclusion

That’s all for this issue. In the next one, I’ll go deep on the MAP function, continuing this series.

In the meantime, do you need help in setting up your AI governance or security program?